12. The Java I/O System

Creating a good input/output (I/O) system is one of the more difficult tasks for the language designer.

This is evidenced by the number of different approaches. The challenge seems to be in covering all eventualities. Not only are there different sources and sinks of I/O that you want to communicate with (files, the console, network connections, etc.), but you need to talk to them in a wide variety of ways (sequential, random-access, buffered, binary, character, by lines, by words, etc.).

The Java library designers attacked this problem by creating lots of classes. In fact, there are so many classes for Java's I/O system that it can be intimidating at first (ironically, the Java I/O design actually prevents an explosion of classes). There was also a significant change in the I/O library after Java 1.0, when the original byte-oriented library was supplemented with char-oriented, Unicode-based I/O classes. In JDK 1.4, the nio classes (for "new I/O," a name we'll still be using years from now) were added for improved performance and functionality. As a result, there are a fair number of classes to learn before you understand enough of Java's I/O picture to use it properly. In addition, it's rather important to understand the history of the I/O library's evolution, even if your first reaction is "don't bother me with history, just show me how to use it!" The problem is that without the historical perspective, you will rapidly become confused by some of the classes and by when you should and shouldn't use them.

This chapter will give you an introduction to the variety of I/O classes in the standard Java library and how to use them.

12-1. The File class

Before getting into the classes that actually read and write data to streams, we'll look at a utility provided with the library to assist you in handling file directory issues.

The File class has a deceptive name; you might think it refers to a file, but it doesn't. It can represent either the name of a particular file or the names of a set of files in a directory. In fact, "FilePath" would have been a better name for the class. If it's a set of files, you can ask for that set using the list( ) method, which returns an array of String. It makes sense to return an array rather than one of the flexible container classes, because the number of elements is fixed, and if you want a different directory listing, you just create a different File object. This section shows an example of the use of this class, including the associated FilenameFilter interface.

12-1-1. A directory lister

Suppose you'd like to see a directory listing. The File object can be listed in two ways. If you call list( ) with no arguments, you'll get the full list that the File object contains. However, if you want a restricted list (for example, all of the files with an extension of .java), then you use a "directory filter," which is a class that tells how to select the File objects for display.

Here's the code for the example. Note that the result has been effortlessly sorted (alphabetically) using the java.util.Arrays.sort( ) method and the AlphabeticComparator defined in Chapter 11:

//: c12:DirList.java
// Displays directory listing using regular expressions.
// {Args: "D.*\.java"}
import java.io.*;
import java.util.*;
import java.util.regex.*;
import com.bruceeckel.util.*;

public class DirList {
  public static void main(String[] args) {
    File path = new File(".");
    String[] list;
    if(args.length == 0)
      list = path.list();
    else
      list = path.list(new DirFilter(args[0]));
    Arrays.sort(list, new AlphabeticComparator());
    for(int i = 0; i < list.length; i++)
      System.out.println(list[i]);
  }
}

class DirFilter implements FilenameFilter {
  private Pattern pattern;
  public DirFilter(String regex) {
    pattern = Pattern.compile(regex);
  }
  public boolean accept(File dir, String name) {
    // Strip path information, search for regex:
    return pattern.matcher(
      new File(name).getName()).matches();
  }
} ///:~

The DirFilter class "implements" the interface FilenameFilter. It's useful to see how simple the FilenameFilter interface is:

public interface FilenameFilter {
  boolean accept(File dir, String name);
}

It says all that this type of object does is provide a method called accept( ). The whole reason behind the creation of this class is to provide the accept( ) method to the list( ) method so that list( ) can "call back" accept( ) to determine which file names should be included in the list. Thus, this structure is often referred to as a callback. More specifically, this is an example of the Strategy Pattern, because list( ) implements basic functionality, and you provide the Strategy in the form of a FilenameFilter in order to complete the algorithm necessary for list( ) to provide its service. Because list( ) takes a FilenameFilter object as its argument, it means that you can pass an object of any class that implements FilenameFilter to choose (even at run time) how the list( ) method will behave. The purpose of a callback is to provide flexibility in the behavior of code.

DirFilter shows that just because an interface contains only a set of methods, you're not restricted to writing only those methods. (You must at least provide definitions for all the methods in an interface, however.) In this case, the DirFilter constructor is also created.

The accept( ) method must accept a File object representing the directory that a particular file is found in, and a String containing the name of that file. You might choose to use or ignore either of these arguments, but you will probably at least use the file name. Remember that the list( ) method is calling accept( ) for each of the file names in the directory object to see which ones should be included; this is indicated by the boolean result returned by accept( ).

To make sure the element you're working with is only the file name and contains no path information, all you have to do is take the String object and create a File object out of it, then call getName( ), which strips away all the path information (in a platform-independent way). Then accept( ) uses a regular expression matcher object to see if the regular expression regex matches the name of the file. Using accept( ), the list( ) method returns an array.
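
The two steps inside accept( ) are easy to try in isolation. Here's a minimal sketch, not part of the book's code: the class name NameMatchSketch, the method nameMatches( ), and the sample paths are all made up for illustration. It assumes only that getName( ) discards the directory part and that matches( ) must match the entire remaining name:

```java
import java.io.File;
import java.util.regex.Pattern;

// Hypothetical helper class, for illustration only.
class NameMatchSketch {
  // Strip any directory part from 'name', then test the whole
  // remaining file name against the regular expression.
  static boolean nameMatches(String regex, String name) {
    String bare = new File(name).getName();
    return Pattern.compile(regex).matcher(bare).matches();
  }
  public static void main(String[] args) {
    // Only the last path element survives getName():
    System.out.println(new File("c12/DirList.java").getName());
    System.out.println(
      nameMatches("D.*\\.java", "c12/DirList.java"));
    System.out.println(
      nameMatches("D.*\\.java", "c12/MakeDirectories.java"));
  }
}
```

Because matches( ) tests the whole string, the pattern rejects MakeDirectories.java even though part of that name ("Directories.java") would satisfy it.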

12-1-1-1. Anonymous inner classes

This example is ideal for rewriting using an anonymous inner class (described in Chapter 8). As a first cut, a method filter( ) is created that returns a reference to a FilenameFilter:

//: c12:DirList2.java
// Uses anonymous inner classes.
// {Args: "D.*\.java"}
import java.io.*;
import java.util.*;
import java.util.regex.*;
import com.bruceeckel.util.*;

public class DirList2 {
  public static FilenameFilter filter(final String regex) {
    // Creation of anonymous inner class:
    return new FilenameFilter() {
      private Pattern pattern = Pattern.compile(regex);
      public boolean accept(File dir, String name) {
        return pattern.matcher(
          new File(name).getName()).matches();
      }
    }; // End of anonymous inner class
  }
  public static void main(String[] args) {
    File path = new File(".");
    String[] list;
    if(args.length == 0)
      list = path.list();
    else
      list = path.list(filter(args[0]));
    Arrays.sort(list, new AlphabeticComparator());
    for(int i = 0; i < list.length; i++)
      System.out.println(list[i]);
  }
} ///:~

Note that the argument to filter( ) must be final. This is required by the anonymous inner class so that it can use an object from outside its scope.

This design is an improvement because the FilenameFilter class is now tightly bound to DirList2. However, you can take this approach one step further and define the anonymous inner class as an argument to list( ), in which case it's even smaller:

//: c12:DirList3.java
// Building the anonymous inner class "in-place."
// {Args: "D.*\.java"}
import java.io.*;
import java.util.*;
import java.util.regex.*;
import com.bruceeckel.util.*;

public class DirList3 {
  public static void main(final String[] args) {
    File path = new File(".");
    String[] list;
    if(args.length == 0)
      list = path.list();
    else
      list = path.list(new FilenameFilter() {
        private Pattern pattern = Pattern.compile(args[0]);
        public boolean accept(File dir, String name) {
          return pattern.matcher(
            new File(name).getName()).matches();
        }
      });
    Arrays.sort(list, new AlphabeticComparator());
    for(int i = 0; i < list.length; i++)
      System.out.println(list[i]);
  }
} ///:~

The argument to main( ) is now final, since the anonymous inner class uses args[0] directly.

This shows you how anonymous inner classes allow the creation of specific, one-off classes to solve problems. One benefit of this approach is that it keeps the code that solves a particular problem isolated together in one spot. On the other hand, it is not always as easy to read, so you must use it judiciously.

12-1-2. Checking for and creating directories

The File class is more than just a representation for an existing file or directory. You can also use a File object to create a new directory or an entire directory path if it doesn't exist. You can also look at the characteristics of files (size, last modification date, read/write), see whether a File object represents a file or a directory, and delete a file. This program shows some of the other methods available with the File class (see the HTML documentation from java.sun.com for the full set):

//: c12:MakeDirectories.java
// Demonstrates the use of the File class to
// create directories and manipulate files.
// {Args: MakeDirectoriesTest}
import com.bruceeckel.simpletest.*;
import java.io.*;

public class MakeDirectories {
  private static Test monitor = new Test();
  private static void usage() {
    System.err.println(
      "Usage:MakeDirectories path1 ...\n" +
      "Creates each path\n" +
      "Usage:MakeDirectories -d path1 ...\n" +
      "Deletes each path\n" +
      "Usage:MakeDirectories -r path1 path2\n" +
      "Renames from path1 to path2");
    System.exit(1);
  }
  private static void fileData(File f) {
    System.out.println(
      "Absolute path: " + f.getAbsolutePath() +
      "\n Can read: " + f.canRead() +
      "\n Can write: " + f.canWrite() +
      "\n getName: " + f.getName() +
      "\n getParent: " + f.getParent() +
      "\n getPath: " + f.getPath() +
      "\n length: " + f.length() +
      "\n lastModified: " + f.lastModified());
    if(f.isFile())
      System.out.println("It's a file");
    else if(f.isDirectory())
      System.out.println("It's a directory");
  }
  public static void main(String[] args) {
    if(args.length < 1) usage();
    if(args[0].equals("-r")) {
      if(args.length != 3) usage();
      File
        old = new File(args[1]),
        rname = new File(args[2]);
      old.renameTo(rname);
      fileData(old);
      fileData(rname);
      return; // Exit main
    }
    int count = 0;
    boolean del = false;
    if(args[0].equals("-d")) {
      count++;
      del = true;
    }
    count--;
    while(++count < args.length) {
      File f = new File(args[count]);
      if(f.exists()) {
        System.out.println(f + " exists");
        if(del) {
          System.out.println("deleting..." + f);
          f.delete();
        }
      }
      else { // Doesn't exist
        if(!del) {
          f.mkdirs();
          System.out.println("created " + f);
        }
      }
      fileData(f);
    }
    if(args.length == 1 &&
        args[0].equals("MakeDirectoriesTest"))
      monitor.expect(new String[] {
        "%% (MakeDirectoriesTest exists"+
          "|created MakeDirectoriesTest)",
        "%% Absolute path: "
          + "\\S+MakeDirectoriesTest",
        "%% Can read: (true|false)",
        "%% Can write: (true|false)",
        " getName: MakeDirectoriesTest",
        " getParent: null",
        " getPath: MakeDirectoriesTest",
        "%% length: \\d+",
        "%% lastModified: \\d+",
        "It's a directory"
      });
  }
} ///:~

In fileData( ) you can see various file investigation methods used to display information about the file or directory path.

The first method that's exercised by main( ) is renameTo( ), which allows you to rename (or move) a file to an entirely new path represented by the argument, which is another File object. This also works with directories of any length.

If you experiment with the preceding program, you'll find that you can make a directory path of any complexity, because mkdirs( ) will do all the work for you.
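
The directory operations can also be tried without the command-line handling. The following sketch is an illustration under stated assumptions rather than part of the book's code: the class MkdirsSketch and its exercise( ) method are hypothetical, and Files.createTempDirectory( ) (from java.nio.file, which arrived well after JDK 1.4) is used only to obtain a scratch directory. Unlike MakeDirectories, it looks at the boolean results, since mkdirs( ) and renameTo( ) report failure by returning false rather than by throwing an exception:

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.Files;

// Hypothetical class, for illustration only.
class MkdirsSketch {
  // Creates base/a/b/c in one call, renames the leaf directory,
  // and reports what happened. 'base' must already exist.
  static boolean[] exercise(File base) {
    File deep = new File(base,
      "a" + File.separator + "b" + File.separator + "c");
    boolean created = deep.mkdirs();         // makes a, a/b and a/b/c
    File renamed = new File(deep.getParentFile(), "c2");
    boolean moved = deep.renameTo(renamed);  // false on failure
    boolean oldGone = !deep.exists();
    boolean newIsDir = renamed.isDirectory();
    return new boolean[] { created, moved, oldGone, newIsDir };
  }
  public static void main(String[] args) throws IOException {
    File base = Files.createTempDirectory("mkdirs-sketch").toFile();
    boolean[] r = exercise(base);
    System.out.println("created=" + r[0] + " moved=" + r[1]
      + " oldGone=" + r[2] + " newIsDir=" + r[3]);
  }
}
```

The behavior of renameTo( ) is platform-dependent, so treat a false result as something to handle, not as something that can't happen.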

12-2. Input and output

I/O libraries often use the abstraction of a stream, which represents any data source or sink as an object capable of producing or receiving pieces of data. The stream hides the details of what happens to the data inside the actual I/O device.

The Java library classes for I/O are divided by input and output, as you can see by looking at the class hierarchy in the JDK documentation. By inheritance, everything derived from the InputStream or Reader classes has basic methods called read( ) for reading a single byte or array of bytes (a single character or array of characters, in the case of Reader). Likewise, everything derived from the OutputStream or Writer classes has basic methods called write( ) for writing a single byte or array of bytes (or characters, for Writer). However, you won't generally use these methods; they exist so that other classes, which provide a more useful interface, can use them. Thus, you'll rarely create your stream object by using a single class, but instead will layer multiple objects together to provide your desired functionality. The fact that you create more than one object to create a single resulting stream is the primary reason that Java's stream library is confusing.
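
To make the idea of layering concrete, here's a small sketch (the class LayeringSketch and the method readFirstInt( ) are invented for this illustration). An in-memory source is wrapped first in a buffering layer and then in a layer that knows how to read primitives, producing one usable stream object:

```java
import java.io.BufferedInputStream;
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.IOException;

// Hypothetical class, for illustration only.
class LayeringSketch {
  // Source: bytes in memory. Layers: buffering, then primitive reads.
  static int readFirstInt(byte[] data) throws IOException {
    DataInputStream in = new DataInputStream(
      new BufferedInputStream(
        new ByteArrayInputStream(data)));
    try {
      return in.readInt();  // consumes four bytes, high byte first
    } finally {
      in.close();           // closing the outer layer closes the inner ones
    }
  }
  public static void main(String[] args) throws IOException {
    byte[] data = { 0, 0, 1, 2 };            // 0x00000102
    System.out.println(readFirstInt(data));  // prints 258
  }
}
```

Three objects are created, but the program only ever talks to the outermost one.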

It's helpful to categorize the classes by their functionality. In Java 1.0, the library designers started by deciding that all classes that had anything to do with input would be inherited from InputStream, and all classes that were associated with output would be inherited from OutputStream.

12-2-1. Types of InputStream

InputStream's job is to represent classes that produce input from different sources. These sources can be:

- An array of bytes.

- A String object.

- A file.

- A "pipe," which works like a physical pipe: You put things in at one end and they come out the other.

- A sequence of other streams, so you can collect them together into a single stream.

- Other sources, such as an Internet connection. (This is covered in Thinking in Enterprise Java.)

Each of these has an associated subclass of InputStream. In addition, the FilterInputStream is also a type of InputStream, to provide a base class for "decorator" classes that attach attributes or useful interfaces to input streams. This is discussed later.

Table 12-1. Types of InputStream

| Class | Function | Constructor arguments | How to use it |
|---|---|---|---|
| ByteArrayInputStream | Allows a buffer in memory to be used as an InputStream. | The buffer from which to extract the bytes. | As a source of data: Connect it to a FilterInputStream object to provide a useful interface. |
| StringBufferInputStream | Converts a String into an InputStream. | A String. The underlying implementation actually uses a StringBuffer. | As a source of data: Connect it to a FilterInputStream object to provide a useful interface. |
| FileInputStream | For reading information from a file. | A String representing the file name, or a File or FileDescriptor object. | As a source of data: Connect it to a FilterInputStream object to provide a useful interface. |
| PipedInputStream | Produces the data that's being written to the associated PipedOutputStream. Implements the "piping" concept. | PipedOutputStream | As a source of data in multithreading: Connect it to a FilterInputStream object to provide a useful interface. |
| SequenceInputStream | Converts two or more InputStream objects into a single InputStream. | Two InputStream objects or an Enumeration for a container of InputStream objects. | As a source of data: Connect it to a FilterInputStream object to provide a useful interface. |
| FilterInputStream | Abstract class that is an interface for decorators that provide useful functionality to the other InputStream classes. See Table 12-3. | See Table 12-3. | See Table 12-3. |
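
As one example from the table, here's a sketch of SequenceInputStream joining two in-memory sources into a single stream. The class SequenceSketch and its concat( ) method are made up for this illustration, and the text is assumed to be plain ASCII so that each byte can be treated as one character:

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.SequenceInputStream;

// Hypothetical class, for illustration only.
class SequenceSketch {
  static String concat(String first, String second)
      throws IOException {
    InputStream in = new SequenceInputStream(
      new ByteArrayInputStream(first.getBytes("US-ASCII")),
      new ByteArrayInputStream(second.getBytes("US-ASCII")));
    StringBuilder sb = new StringBuilder();
    int b;
    // read() returns -1 only after BOTH sources are exhausted:
    while ((b = in.read()) != -1)
      sb.append((char) b);
    in.close();
    return sb.toString();
  }
  public static void main(String[] args) throws IOException {
    System.out.println(concat("abc", "def"));
  }
}
```

The reading loop can't tell where the first source ends and the second begins, which is exactly the point.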

12-2-2. Types of OutputStream

This category includes the classes that decide where your output will go: an array of bytes (no String, however; presumably, you can create one using the array of bytes), a file, or a "pipe."

In addition, the FilterOutputStream provides a base class for "decorator" classes that attach attributes or useful interfaces to output streams. This is discussed later.

Table 12-2. Types of OutputStream

| Class | Function | Constructor arguments | How to use it |
|---|---|---|---|
| ByteArrayOutputStream | Creates a buffer in memory. All the data that you send to the stream is placed in this buffer. | Optional initial size of the buffer. | To designate the destination of your data: Connect it to a FilterOutputStream object to provide a useful interface. |
| FileOutputStream | For sending information to a file. | A String representing the file name, or a File or FileDescriptor object. | To designate the destination of your data: Connect it to a FilterOutputStream object to provide a useful interface. |
| PipedOutputStream | Any information you write to this automatically ends up as input for the associated PipedInputStream. Implements the "piping" concept. | PipedInputStream | To designate the destination of your data for multithreading: Connect it to a FilterOutputStream object to provide a useful interface. |
| FilterOutputStream | Abstract class that is an interface for decorators that provide useful functionality to the other OutputStream classes. See Table 12-4. | See Table 12-4. | See Table 12-4. |
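
There's no OutputStream that writes directly into a String, but as suggested earlier you can build one from the array of bytes. Here's a minimal sketch of that idea (the class ByteSinkSketch and the method collect( ) are hypothetical, and UTF-8 is an arbitrary choice of encoding for the example):

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;

// Hypothetical class, for illustration only.
class ByteSinkSketch {
  static String collect(String[] pieces) throws IOException {
    // No argument: use the default initial buffer size.
    ByteArrayOutputStream out = new ByteArrayOutputStream();
    for (int i = 0; i < pieces.length; i++)
      out.write(pieces[i].getBytes("UTF-8"));
    byte[] bytes = out.toByteArray();   // copy of everything written
    return new String(bytes, "UTF-8");  // build the String from the bytes
  }
  public static void main(String[] args) throws IOException {
    System.out.println(collect(new String[] { "I/", "O" }));
  }
}
```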

12-3. Adding attributes and useful interfaces

The use of layered objects to dynamically and transparently add responsibilities to individual objects is referred to as the Decorator pattern. (Patterns (61) are the subject of Thinking in Patterns (with Java) at www.BruceEckel.com.) The decorator pattern specifies that all objects that wrap around your initial object have the same interface. This makes the basic use of the decorators transparent: you send the same message to an object whether it has been decorated or not. This is the reason for the existence of the "filter" classes in the Java I/O library: The abstract "filter" class is the base class for all the decorators. (A decorator must have the same interface as the object it decorates, but the decorator can also extend the interface, which occurs in several of the "filter" classes).

Decorators are often used when simple subclassing results in a large number of classes in order to satisfy every possible combination that is needed, so many classes that it becomes impractical. The Java I/O library requires many different combinations of features, and this is the justification for using the decorator pattern. (62) There is a drawback to the decorator pattern, however. Decorators give you much more flexibility while you're writing a program (since you can easily mix and match attributes), but they add complexity to your code. The reason that the Java I/O library is awkward to use is that you must create many classes (the "core" I/O type plus all the decorators) in order to get the single I/O object that you want.

The classes that provide the decorator interface to control a particular InputStream or OutputStream are the FilterInputStream and FilterOutputStream, which don't have very intuitive names. FilterInputStream and FilterOutputStream are derived from the base classes of the I/O library, InputStream and OutputStream, which is the key requirement of the decorator (so that it provides the common interface to all the objects that are being decorated).
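
To see what "the same interface" means in practice, here's a sketch of a home-made decorator. UpperCaseInputStream is not a library class; it's invented for this illustration, and it assumes ASCII input. Because it inherits from FilterInputStream, client code such as readAll( ) calls read( ) in exactly the same way whether or not the stream has been decorated:

```java
import java.io.ByteArrayInputStream;
import java.io.FilterInputStream;
import java.io.IOException;
import java.io.InputStream;

// Hypothetical decorator: upper-cases ASCII letters as they are read.
class UpperCaseInputStream extends FilterInputStream {
  UpperCaseInputStream(InputStream in) { super(in); }
  public int read() throws IOException {
    int b = super.read();  // -1 at end of stream passes through unchanged
    return (b >= 'a' && b <= 'z') ? b - ('a' - 'A') : b;
  }
  public int read(byte[] buf, int off, int len)
      throws IOException {
    int n = super.read(buf, off, len);
    // If n is -1 (end of stream) the loop body never runs:
    for (int i = off; i < off + n; i++)
      if (buf[i] >= 'a' && buf[i] <= 'z')
        buf[i] -= ('a' - 'A');
    return n;
  }
  // Client code: works on any InputStream, decorated or not.
  static String readAll(InputStream in) throws IOException {
    StringBuilder sb = new StringBuilder();
    int b;
    while ((b = in.read()) != -1)
      sb.append((char) b);
    return sb.toString();
  }
  public static void main(String[] args) throws IOException {
    InputStream plain = new ByteArrayInputStream(
      "decorator".getBytes("US-ASCII"));
    System.out.println(readAll(new UpperCaseInputStream(plain)));
  }
}
```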

12-3-1. Reading from an InputStream with FilterInputStream

The FilterInputStream classes accomplish two significantly different things. DataInputStream allows you to read different types of primitive data as well as String objects. (All the methods start with "read," such as readByte( ), readFloat( ), etc.) This, along with its companion DataOutputStream, allows you to move primitive data from one place to another via a stream. These "places" are determined by the classes in Table 12-1.

The remaining classes modify the way an InputStream behaves internally: whether it's buffered or unbuffered, if it keeps track of the lines it's reading (allowing you to ask for line numbers or set the line number), and whether you can push back a single character. The last two classes look a lot like support for building a compiler (that is, they were probably added to support the construction of the Java compiler), so you probably won't use them in general programming.

You'll need to buffer your input almost every time, regardless of the I/O device you're connecting to, so it would have made more sense for the I/O library to make a special case (or simply a method call) for unbuffered input rather than buffered input.

Table 12-3. Types of FilterInputStream

| Class | Function | Constructor arguments | How to use it |
|---|---|---|---|
| DataInputStream | Used in concert with DataOutputStream, so you can read primitives (int, char, long, etc.) from a stream in a portable fashion. | InputStream | Contains a full interface to allow you to read primitive types. |
| BufferedInputStream | Use this to prevent a physical read every time you want more data. You're saying "Use a buffer." | InputStream, with optional buffer size. | This doesn't provide an interface per se, just a requirement that a buffer be used. Attach an interface object. |
| LineNumberInputStream | Keeps track of line numbers in the input stream; you can call getLineNumber( ) and setLineNumber(int). | InputStream | This just adds line numbering, so you'll probably attach an interface object. |
| PushbackInputStream | Has a one byte push-back buffer so that you can push back the last character read. | InputStream | Generally used in the scanner for a compiler and probably included because the Java compiler needed it. You probably won't use this. |
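
Even if you never write a compiler, a tiny scanner shows why push-back is handy. In the following sketch (PushbackSketch and readDigits( ) are hypothetical names, and the input is assumed to be ASCII), the scanner only discovers that a number has ended by reading one byte too many, so it puts that byte back for whoever reads next:

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.PushbackInputStream;

// Hypothetical class, for illustration only.
class PushbackSketch {
  // Reads a run of ASCII digits. The first non-digit byte is pushed
  // back so that the next reader still sees it.
  static String readDigits(PushbackInputStream in)
      throws IOException {
    StringBuilder sb = new StringBuilder();
    int b;
    while ((b = in.read()) != -1) {
      if (b < '0' || b > '9') {
        in.unread(b);  // fits in the default one-byte buffer
        break;
      }
      sb.append((char) b);
    }
    return sb.toString();
  }
  public static void main(String[] args) throws IOException {
    PushbackInputStream in = new PushbackInputStream(
      new ByteArrayInputStream("123+4".getBytes("US-ASCII")));
    System.out.println(readDigits(in));    // the digits before '+'
    System.out.println((char) in.read());  // the pushed-back '+'
  }
}
```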

12-3-2. Writing to an OutputStream with FilterOutputStream

The complement to DataInputStream is DataOutputStream, which formats each of the primitive types and String objects onto a stream in such a way that any DataInputStream, on any machine, can read them. All the methods start with "write," such as writeByte( ), writeFloat( ), etc.

The original intent of PrintStream was to print all of the primitive data types and String objects in a viewable format. This is different from DataOutputStream, whose goal is to put data elements on a stream in a way that DataInputStream can portably reconstruct them.

The two important methods in PrintStream are print( ) and println( ), which are overloaded to print all the various types. The difference between print( ) and println( ) is that the latter adds a newline when it's done.

PrintStream can be problematic because it traps all IOExceptions (you must explicitly test the error status with checkError( ), which returns true if an error has occurred). Also, PrintStream doesn't internationalize properly and doesn't handle line breaks in a platform-independent way (these problems are solved with PrintWriter, described later).
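
Here's a sketch of what trapping the exception looks like from the caller's side. The class CheckErrorSketch and the deliberately failing BrokenSink are invented for this illustration; the only library behavior relied upon is that println( ) doesn't throw IOException and that checkError( ) reports the trapped failure afterward:

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.io.PrintStream;

// Hypothetical class, for illustration only.
class CheckErrorSketch {
  // A sink that fails on every write, to provoke the error state.
  static class BrokenSink extends OutputStream {
    public void write(int b) throws IOException {
      throw new IOException("simulated device failure");
    }
  }
  static boolean printAndCheck(OutputStream target) {
    PrintStream out = new PrintStream(target);
    out.println("hello");     // no exception reaches the caller
    return out.checkError();  // flushes, then reports any trapped error
  }
  public static void main(String[] args) {
    System.out.println(printAndCheck(new BrokenSink()));
    System.out.println(printAndCheck(new ByteArrayOutputStream()));
  }
}
```

If you never call checkError( ), a failed write simply goes unnoticed.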

BufferedOutputStream is a modifier and tells the stream to use buffering so you don't get a physical write every time you write to the stream. You'll probably always want to use this when doing output.

Table 12-4. Types of FilterOutputStream

| Class | Function | Constructor arguments | How to use it |
|---|---|---|---|
| DataOutputStream | Used in concert with DataInputStream so you can write primitives (int, char, long, etc.) to a stream in a portable fashion. | OutputStream | Contains full interface to allow you to write primitive types. |
| PrintStream | For producing formatted output. While DataOutputStream handles the storage of data, PrintStream handles display. | OutputStream, with optional boolean indicating that the buffer is flushed with every newline. | Should be the "final" wrapping for your OutputStream object. You'll probably use this a lot. |
| BufferedOutputStream | Use this to prevent a physical write every time you send a piece of data. You're saying "Use a buffer." You can call flush( ) to flush the buffer. | OutputStream, with optional buffer size. | This doesn't provide an interface per se, just a requirement that a buffer is used. Attach an interface object. |
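
The pairing of DataOutputStream with DataInputStream can be sketched entirely in memory. In this illustration (DataRoundTripSketch, store( ), and recover( ) are hypothetical names, and the values are arbitrary), the data is stored in binary form and then reconstructed; note that it must be read back in the same order and with the same types that were written:

```java
import java.io.BufferedOutputStream;
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;

// Hypothetical class, for illustration only.
class DataRoundTripSketch {
  static byte[] store(int i, double d, String s)
      throws IOException {
    ByteArrayOutputStream bytes = new ByteArrayOutputStream();
    DataOutputStream out = new DataOutputStream(
      new BufferedOutputStream(bytes));
    out.writeInt(i);     // 4 bytes
    out.writeDouble(d);  // 8 bytes
    out.writeUTF(s);     // 2-byte length, then the encoded characters
    out.close();         // flushes the buffer into 'bytes'
    return bytes.toByteArray();
  }
  static String recover(byte[] data) throws IOException {
    DataInputStream in = new DataInputStream(
      new ByteArrayInputStream(data));
    // Operands are evaluated left to right, matching the write order:
    return in.readInt() + " " + in.readDouble() + " " + in.readUTF();
  }
  public static void main(String[] args) throws IOException {
    System.out.println(recover(store(47, 3.14159, "pi")));
  }
}
```

A PrintStream would have written the same values as readable text instead, which is fine for display but gives a reader no reliable way to tell where one value stops and the next begins.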

12-4. Readers & Writers

Java 1.1 made some significant modifications to the fundamental I/O stream library. When you see the Reader and Writer classes, your first thought (like mine) might be that these were meant to replace the InputStream and OutputStream classes. But that's not the case. Although some aspects of the original streams library are deprecated (if you use them you will receive a warning from the compiler), the InputStream and OutputStream classes still provide valuable functionality in the form of byte-oriented I/O, whereas the Reader and Writer classes provide Unicode-compliant, character-based I/O. In addition:

- Java 1.1 added new classes into the InputStream and OutputStream hierarchy, so it's obvious those hierarchies weren't being replaced.

- There are times when you must use classes from the "byte" hierarchy in combination with classes in the "character" hierarchy. To accomplish this, there are "adapter" classes: InputStreamReader converts an InputStream to a Reader and OutputStreamWriter converts an OutputStream to a Writer.

The most important reason for the Reader and Writer hierarchies is for internationalization. The old I/O stream hierarchy supports only 8-bit byte streams and doesn't handle the 16-bit Unicode characters well. Since Unicode is used for internationalization (and Java's native char is 16-bit Unicode), the Reader and Writer hierarchies were added to support Unicode in all I/O operations. In addition, the new libraries are designed for faster operations than the old.
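
The adapter classes are where bytes and characters actually meet. Here's a sketch of both directions (the class AdapterSketch and its encode( ) and decode( ) methods are made up for this illustration, and UTF-8 is just one possible encoding). It shows that the number of characters and the number of bytes are not the same thing once you leave ASCII:

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.Reader;
import java.io.Writer;

// Hypothetical class, for illustration only.
class AdapterSketch {
  // chars -> bytes: a Writer layered on an OutputStream.
  static byte[] encode(String text, String charset)
      throws IOException {
    ByteArrayOutputStream bytes = new ByteArrayOutputStream();
    Writer w = new OutputStreamWriter(bytes, charset);
    w.write(text);
    w.close();  // flushes the encoder into 'bytes'
    return bytes.toByteArray();
  }
  // bytes -> chars: a Reader layered on an InputStream.
  static String decode(byte[] data, String charset)
      throws IOException {
    Reader r = new InputStreamReader(
      new ByteArrayInputStream(data), charset);
    StringBuilder sb = new StringBuilder();
    int c;
    while ((c = r.read()) != -1)
      sb.append((char) c);
    r.close();
    return sb.toString();
  }
  public static void main(String[] args) throws IOException {
    String text = "na\u00efve";  // five chars, one of them non-ASCII
    byte[] utf8 = encode(text, "UTF-8");
    System.out.println(
      text.length() + " chars, " + utf8.length + " bytes");
    System.out.println(decode(utf8, "UTF-8").equals(text));
  }
}
```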

As is the practice in this book, I will attempt to provide an overview of the classes, but assume that you will use the JDK documentation to determine all the details, such as the exhaustive list of methods.

12-4-1. Sources and sinks of data

Almost all of the original Java I/O stream classes have corresponding Reader and Writer classes to provide native Unicode manipulation. However, there are some places where the byte-oriented InputStreams and OutputStreams are the correct solution; in particular, the java.util.zip libraries are byte-oriented rather than char-oriented. So the most sensible approach to take is to try to use the Reader and Writer classes whenever you can, and you'll discover the situations when you have to use the byte-oriented libraries, because your code won't compile.

Here is a table that shows the correspondence between the sources and sinks of information (that is, where the data physically comes from or goes to) in the two hierarchies.

| Sources & sinks: Java 1.0 class | Corresponding Java 1.1 class |
|---|---|
| InputStream | Reader; adapter: InputStreamReader |
| OutputStream | Writer; adapter: OutputStreamWriter |
| FileInputStream | FileReader |
| FileOutputStream | FileWriter |
| StringBufferInputStream | StringReader |
| (no corresponding class) | StringWriter |
| ByteArrayInputStream | CharArrayReader |
| ByteArrayOutputStream | CharArrayWriter |
| PipedInputStream | PipedReader |
| PipedOutputStream | PipedWriter |

In general, you'll find that the interfaces for the two different hierarchies are similar if not identical.
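
You can see the similarity by writing the same copy loop twice, once for each hierarchy. This is only a sketch (SourcesAndSinksSketch and its two methods are hypothetical), using in-memory sources and sinks from the table so that no files are needed:

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.Reader;
import java.io.StringReader;
import java.io.StringWriter;

// Hypothetical class, for illustration only.
class SourcesAndSinksSketch {
  // Byte hierarchy: ByteArrayInputStream to ByteArrayOutputStream.
  static byte[] copyBytes(byte[] src) throws IOException {
    InputStream in = new ByteArrayInputStream(src);
    ByteArrayOutputStream out = new ByteArrayOutputStream();
    int b;
    while ((b = in.read()) != -1)
      out.write(b);
    return out.toByteArray();
  }
  // Character hierarchy: StringReader to StringWriter.
  static String copyChars(String src) throws IOException {
    Reader in = new StringReader(src);
    StringWriter out = new StringWriter();
    int c;
    while ((c = in.read()) != -1)
      out.write(c);
    return out.toString();
  }
  public static void main(String[] args) throws IOException {
    System.out.println(copyBytes(new byte[] { 1, 2, 3 }).length);
    System.out.println(copyChars("Unicode: \u03c0"));
  }
}
```

Apart from the class names, the only real difference is the unit being moved: a byte in one loop, a 16-bit char in the other.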

12-4-2. Modifying stream behavior

For InputStreams and OutputStreams, streams were adapted for particular needs using "decorator" subclasses of FilterInputStream and FilterOutputStream. The Reader and Writer class hierarchies continue the use of this idea, but not exactly.

In the following table, the correspondence is a rougher approximation than in the previous table. The difference is because of the class organization; although BufferedOutputStream is a subclass of FilterOutputStream, BufferedWriter is not a subclass of FilterWriter (which, even though it is abstract, has no subclasses and so appears to have been put in either as a placeholder or simply so you wouldn't wonder where it was). However, the interfaces to the classes are quite a close match.

| Filters: Java 1.0 class | Corresponding Java 1.1 class |
|---|---|
| FilterInputStream | FilterReader |
| FilterOutputStream | FilterWriter (abstract class with no subclasses) |
| BufferedInputStream | BufferedReader (also has readLine( )) |
| BufferedOutputStream | BufferedWriter |
| DataInputStream | Use DataInputStream (except when you need to use readLine( ), when you should use a BufferedReader) |
| PrintStream | PrintWriter |
| LineNumberInputStream (deprecated) | LineNumberReader |
| StreamTokenizer | StreamTokenizer (use constructor that takes a Reader instead) |
| PushbackInputStream | PushbackReader |

There's one direction that's quite clear: Whenever you want to use readLine( ), you shouldn't do it with a DataInputStream (this is met with a deprecation message at compile time), but instead use a BufferedReader. Other than this, DataInputStream is still a "preferred" member of the I/O library.

To make the transition to using a PrintWriter easier, it has constructors that take any OutputStream object as well as Writer objects. However, PrintWriter has no more support for formatting than PrintStream does; the interfaces are virtually the same.

The PrintWriter constructor also has an option to perform automatic flushing, which happens after every println( ) if the constructor flag is set.
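
Two of the replacements in the table, LineNumberReader and PrintWriter, combine naturally. The following sketch (NumberedLinesSketch and number( ) are hypothetical names) numbers the lines of a String, using the PrintWriter constructor flag so that each println( ) is flushed automatically:

```java
import java.io.IOException;
import java.io.LineNumberReader;
import java.io.PrintWriter;
import java.io.StringReader;
import java.io.StringWriter;

// Hypothetical class, for illustration only.
class NumberedLinesSketch {
  static String number(String text) throws IOException {
    LineNumberReader in =
      new LineNumberReader(new StringReader(text));
    StringWriter result = new StringWriter();
    // Second argument true: flush after every println()
    PrintWriter out = new PrintWriter(result, true);
    String line;
    while ((line = in.readLine()) != null)
      // getLineNumber() now gives the number of the line just read
      out.println(in.getLineNumber() + ": " + line);
    return result.toString();
  }
  public static void main(String[] args) throws IOException {
    System.out.print(number("alpha\nbeta"));
  }
}
```

Each output line ends with the platform's own line separator, which is one of the things PrintWriter was meant to get right.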

12-4-3. Unchanged Classes

Some classes were left unchanged between Java 1.0 and Java 1.1:

| Java 1.0 classes without corresponding Java 1.1 classes |
|---|
| DataOutputStream |
| File |
| RandomAccessFile |
| SequenceInputStream |

DataOutputStream, in particular, is used without change, so for storing and retrieving data in a transportable format, you use the InputStream and OutputStream hierarchies.

12-5. Off by itself: RandomAccessFile

RandomAccessFile is used for files containing records of known size so that you can move from one record to another using seek( ), then read or change the records. The records don't have to be the same size; you just have to be able to determine how big they are and where they are placed in the file.

At first it's a little bit hard to believe that RandomAccessFile is not part of the InputStream or OutputStream hierarchy. However, it has no association with those hierarchies other than that it happens to implement the DataInput and DataOutput interfaces (which are also implemented by DataInputStream and DataOutputStream). It doesn't even use any of the functionality of the existing InputStream or OutputStream classes; it's a completely separate class, written from scratch, with all of its own (mostly native) methods. The reason for this may be that RandomAccessFile has essentially different behavior than the other I/O types, since you can move forward and backward within a file. In any event, it stands alone, as a direct descendant of Object.

Essentially, a RandomAccessFile works like a DataInputStream pasted together with a DataOutputStream, along with the methods getFilePointer( ) to find out where you are in the file, seek( ) to move to a new point in the file, and length( ) to determine the maximum size of the file. In addition, the constructors require a second argument (identical to fopen( ) in C) indicating whether you are just randomly reading ("r") or reading and writing ("rw"). There's no support for write-only files, which could suggest that RandomAccessFile might have worked well if it were inherited from DataInputStream.

The seeking methods are available only in RandomAccessFile, which works for files only. BufferedInputStream does allow you to mark( ) a position (whose value is held in a single internal variable) and reset( ) to that position, but this is limited and not very useful.
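
Here's a sketch of the record-oriented style of access described above. The class RandomAccessSketch, the method writePatchRead( ), and the choice of one double per record are assumptions made for this illustration; a temporary file is used so the example cleans up after itself:

```java
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;

// Hypothetical class, for illustration only.
class RandomAccessSketch {
  static final int RECORD_SIZE = 8;  // one double per record
  // Writes 'count' doubles, overwrites record 'index' in place,
  // then reads every record back from the start of the file.
  static double[] writePatchRead(
      File f, int count, int index, double patch)
      throws IOException {
    RandomAccessFile rf = new RandomAccessFile(f, "rw");
    try {
      for (int i = 0; i < count; i++)
        rf.writeDouble(i * 1.5);
      rf.seek((long) index * RECORD_SIZE);  // jump to the record
      rf.writeDouble(patch);
      rf.seek(0);                           // back to the beginning
      double[] result =
        new double[(int) (rf.length() / RECORD_SIZE)];
      for (int i = 0; i < result.length; i++)
        result[i] = rf.readDouble();
      return result;
    } finally {
      rf.close();
    }
  }
  public static void main(String[] args) throws IOException {
    File f = File.createTempFile("rtest", ".dat");
    f.deleteOnExit();
    double[] values = writePatchRead(f, 4, 2, 47.0);
    for (int i = 0; i < values.length; i++)
      System.out.println("Value " + i + ": " + values[i]);
  }
}
```

Because a double is always 8 bytes long, the position of any record is just its index multiplied by the record size, which is what makes seek( ) practical here.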

Most, if not all, of the RandomAccessFile functionality is superseded as of JDK 1.4 by the nio memory-mapped files, which will be described later in this chapter.

12-6. Typical uses of I/O streams

Although you can combine the I/O stream classes in many different ways, you'll probably just use a few combinations. The following example can be used as a basic reference; it shows the creation and use of typical I/O configurations. Note that each configuration begins with a commented number and title that corresponds to the heading for the appropriate explanation that follows in the text.

//: c12:IOStreamDemo.java
// Typical I/O stream configurations.
// {RunByHand}
// {Clean: IODemo.out,Data.txt,rtest.dat}
import com.bruceeckel.simpletest.*;
import java.io.*;

public class IOStreamDemo {
  private static Test monitor = new Test();
  // Throw exceptions to console:
  public static void main(String[] args)
  throws IOException {
    // 1. Reading input by lines:
    BufferedReader in = new BufferedReader(
      new FileReader("IOStreamDemo.java"));
    String s, s2 = new String();
    while((s = in.readLine())!= null)
      s2 += s + "\n";
    in.close();

    // 1b. Reading standard input:
    BufferedReader stdin = new BufferedReader(
      new InputStreamReader(System.in));
    System.out.print("Enter a line:");
    System.out.println(stdin.readLine());

    // 2. Input from memory
    StringReader in2 = new StringReader(s2);
    int c;
    while((c = in2.read()) != -1)
      System.out.print((char)c);

    // 3. Formatted memory input
    try {
      DataInputStream in3 = new DataInputStream(
        new ByteArrayInputStream(s2.getBytes()));
      while(true)
        System.out.print((char)in3.readByte());
    } catch(EOFException e) {
      System.err.println("End of stream");
    }

    // 4. File output
    try {
      BufferedReader in4 = new BufferedReader(
        new StringReader(s2));
      PrintWriter out1 = new PrintWriter(
        new BufferedWriter(new FileWriter("IODemo.out")));
      int lineCount = 1;
      while((s = in4.readLine()) != null )
        out1.println(lineCount++ + ": " + s);
      out1.close();
    } catch(EOFException e) {
      System.err.println("End of stream");
    }

    // 5. Storing & recovering data
    try {
      DataOutputStream out2 = new DataOutputStream(
        new BufferedOutputStream(
          new FileOutputStream("Data.txt")));
      out2.writeDouble(3.14159);
      out2.writeUTF("That was pi");
      out2.writeDouble(1.41421);
      out2.writeUTF("Square root of 2");
      out2.close();
      DataInputStream in5 = new DataInputStream(
        new BufferedInputStream(
          new FileInputStream("Data.txt")));
      // Must use DataInputStream for data:
      System.out.println(in5.readDouble());
      // Only readUTF() will recover the
      // Java-UTF String properly:
      System.out.println(in5.readUTF());
      // Read the following double and String:
      System.out.println(in5.readDouble());
      System.out.println(in5.readUTF());
    } catch(EOFException e) {
      throw new RuntimeException(e);
    }

    // 6. Reading/writing random access files
    RandomAccessFile rf =
      new RandomAccessFile("rtest.dat", "rw");
    for(int i = 0; i < 10; i++)
      rf.writeDouble(i*1.414);
    rf.close();
    rf = new RandomAccessFile("rtest.dat", "rw");
    rf.seek(5*8);
    rf.writeDouble(47.0001);
    rf.close();
    rf = new RandomAccessFile("rtest.dat", "r");
    for(int i = 0; i < 10; i++)
      System.out.println("Value " + i + ": " +
        rf.readDouble());
    rf.close();
    monitor.expect("IOStreamDemo.out");
  }
} ///:~

Here are the descriptions for the numbered sections of the program:

12-6-1. Input streams▲

Parts 1 through 4 demonstrate the creation and use of input streams. Part 4 also shows the simple use of an output stream.

12-6-1-1. Buffered input file (1)▲

To open a file for character input, you use a FileReader with a String or a File object as the file name. For speed, you'll want that file to be buffered, so you give the resulting reference to the constructor for a BufferedReader. Since BufferedReader also provides the readLine( ) method, this is your final object and the interface you read from. When you reach the end of the file, readLine( ) returns null, so that is used to break out of the while loop.

The String s2 is used to accumulate the entire contents of the file (including newlines that must be added since readLine( ) strips them off). s2 is then used in the later portions of this program. Finally, close( ) is called to close the file. Technically, close( ) will be called when finalize( ) runs, and this is supposed to happen (whether or not garbage collection occurs) as the program exits. However, this has been inconsistently implemented, so the only safe approach is to explicitly call close( ) for files.
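The advice to close files explicitly can be sketched with try-with-resources, which calls close( ) even if a read throws. This is an illustration under assumptions rather than the example's own code: it needs Java 7 or later, the class name ReadLinesSketch and the readAll( ) helper are hypothetical, and a StringReader stands in for the FileReader so the sketch runs without a file on disk. It also accumulates into a StringBuilder instead of using += on a String.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;

public class ReadLinesSketch {
  // Reads everything from 'source' line by line, restoring the newlines
  // that readLine() strips, and closes the reader even if a read fails.
  public static String readAll(Reader source) throws IOException {
    StringBuilder sb = new StringBuilder();
    try (BufferedReader in = new BufferedReader(source)) {
      String s;
      while ((s = in.readLine()) != null)
        sb.append(s).append('\n');
    }
    return sb.toString();
  }

  public static void main(String[] args) throws IOException {
    // A StringReader stands in for new FileReader("SomeFile.java")
    System.out.print(readAll(new StringReader("first\nsecond\nthird")));
  }
}
```

Closing the BufferedReader also closes the reader it wraps, so only the outermost object needs to appear in the try header.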

Section 1b shows how you can wrap System.in for reading console input. System.in is an InputStream, and BufferedReader needs a Reader argument, so InputStreamReader is brought in to perform the adaptation.

12-6-1-2. Input from memory (2)▲

This section takes the String s2 that now contains the entire contents of the file and uses it to create a StringReader. Then read( ) is used to read each character one at a time and send it out to the console. Note that read( ) returns the next character as an int and thus it must be cast to a char to print properly.

12-6-1-3. Formatted memory input (3)▲

To read "formatted" data, you use a DataInputStream, which is a byte-oriented I/O class (rather than char-oriented). Thus you must use all InputStream classes rather than Reader classes. Of course, you can read anything (such as a file) as bytes using InputStream classes, but here a String is used. To convert the String to an array of bytes, which is what is appropriate for a ByteArrayInputStream, String has a getBytes( ) method to do the job. At that point, you have an appropriate InputStream to hand to DataInputStream.

If you read the characters from a DataInputStream one byte at a time using readByte( ), any byte value is a legitimate result, so the return value cannot be used to detect the end of input. Instead, you can use the available( ) method to find out how many more bytes are available. Here's an example that shows how to read a file one byte at a time:

//: c12:TestEOF.java
// Testing for end of file while reading a byte at a time.
import java.io.*;

public class TestEOF {
  // Throw exceptions to console:
  public static void main(String[] args)
  throws IOException {
    DataInputStream in = new DataInputStream(
      new BufferedInputStream(
        new FileInputStream("TestEOF.java")));
    while(in.available() != 0)
      System.out.print((char)in.readByte());
  }
} ///:~

Note that available( ) works differently depending on what sort of medium you're reading from; it's literally "the number of bytes that can be read without blocking." With a file, this means the whole file, but with a different kind of stream this might not be true, so use it thoughtfully.

You could also detect the end of input in cases like these by catching an exception. However, the use of exceptions for control flow is considered a misuse of that feature.
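A third possibility, not used in the example, is the plain read( ) method that every InputStream provides. It returns each byte as an int in the range 0 to 255 and returns -1 only at the end of the stream, so the end can be detected without available( ) and without an exception. The sketch below is only an illustration; the class name ReadUntilEndSketch and the countBytes( ) helper are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;

public class ReadUntilEndSketch {
  // Counts bytes by calling read() until it reports end of stream.
  // read() returns 0..255 for data and -1 only at the end, so the byte
  // 0xFF (which readByte() would return as -1) is not mistaken for it.
  public static int countBytes(InputStream in) throws IOException {
    int count = 0;
    while (in.read() != -1)
      count++;
    return count;
  }

  public static void main(String[] args) throws IOException {
    byte[] data = { 65, (byte) 0xFF, 66 };
    System.out.println(countBytes(new ByteArrayInputStream(data)));
  }
}
```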

12-6-1-4. File output (4)▲

This example also shows how to write data to a file. First, a FileWriter is created to connect to the file. You'll virtually always want to buffer the output by wrapping it in a BufferedWriter (try removing this wrapping to see the impact on performance; buffering tends to dramatically increase the performance of I/O operations). Then for the formatting it's turned into a PrintWriter. The data file created this way is readable as an ordinary text file.

As the lines are written to the file, line numbers are added. Note that LineNumberInputStream is not used, because it's a silly class and you don't need it. As shown here, it's trivial to keep track of your own line numbers.

When the input stream is exhausted, readLine( ) returns null. You'll see an explicit close( ) for out1, because if you don't call close( ) for all your output files, you might discover that the buffers don't get flushed, so they're incomplete.

12-6-2. Output streams▲

The two primary kinds of output streams are separated by the way they write data; one writes it for human consumption, and the other writes it to be reacquired by a DataInputStream. The RandomAccessFile stands alone, although its data format is compatible with the DataInputStream and DataOutputStream.

12-6-2-1. Storing and recovering data (5)▲

A PrintWriter formats data so that it's readable by a human. However, to output data for recovery by another stream, you use a DataOutputStream to write the data and a DataInputStream to recover the data. Of course, these streams could be anything, but here a file is used, buffered for both reading and writing. DataOutputStream and DataInputStream are byte-oriented and thus require the InputStreams and OutputStreams.

If you use a DataOutputStream to write the data, then Java guarantees that you can accurately recover the data using a DataInputStream, regardless of which platforms write and read the data. This is incredibly valuable, as anyone knows who has spent time worrying about platform-specific data issues. That problem vanishes if you have Java on both platforms. (63)

When using a DataOutputStream, the only reliable way to write a String so that it can be recovered by a DataInputStream is to use UTF-8 encoding, accomplished in section 5 of the example using writeUTF( ) and readUTF( ). UTF-8 is an encoding of Unicode. Java itself stores each char in two bytes, but if you're working with ASCII or mostly ASCII characters (which occupy only seven bits), writing two bytes per character is a tremendous waste of space and/or bandwidth, so UTF-8 encodes ASCII characters in a single byte, and the variation Java uses here encodes other characters in two or three bytes. In addition, the length of the encoded string, in bytes, is stored in the first two bytes. However, writeUTF( ) and readUTF( ) use a special variation of UTF-8 for Java (which is completely described in the JDK documentation for those methods), so if you read a string written with writeUTF( ) using a non-Java program, you must write special code in order to read the string properly.

With writeUTF( ) and readUTF( ), you can intermingle Strings and other types of data using a DataOutputStream with the knowledge that the Strings will be properly stored as Unicode, and will be easily recoverable with a DataInputStream.
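To make the format concrete, the following sketch captures the bytes that writeUTF( ) produces and prints them in hexadecimal. It is an illustration only: the class name WriteUTFSketch and the encode( ) and hex( ) helpers are hypothetical, and it assumes Java 7 or later for try-with-resources.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;

public class WriteUTFSketch {
  // Returns the exact bytes that writeUTF() emits for 's'.
  public static byte[] encode(String s) throws IOException {
    ByteArrayOutputStream bytes = new ByteArrayOutputStream();
    try (DataOutputStream out = new DataOutputStream(bytes)) {
      out.writeUTF(s);
    }
    return bytes.toByteArray();
  }

  // Formats bytes as two-digit hex values separated by spaces.
  public static String hex(byte[] data) {
    StringBuilder sb = new StringBuilder();
    for (byte b : data)
      sb.append(String.format("%02X ", b & 0xFF));
    return sb.toString().trim();
  }

  public static void main(String[] args) throws IOException {
    System.out.println(hex(encode("abc")));     // 00 03 61 62 63
    System.out.println(hex(encode("\u00E9")));  // 00 02 C3 A9
    System.out.println(hex(encode("\0")));      // 00 02 C0 80
  }
}
```

The first two bytes of each line are the length prefix. The last line shows one place where the Java variation differs from standard UTF-8: the null character is written as two bytes rather than as a single zero byte.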

The writeDouble( ) stores the double number to the stream and the complementary readDouble( ) recovers it (there are similar methods for reading and writing the other types). But for any of the reading methods to work correctly, you must know the exact placement of the data item in the stream, since it would be equally possible to read the stored double as a simple sequence of bytes, or as a char, etc. So you must either have a fixed format for the data in the file, or extra information must be stored in the file that you parse to determine where the data is located. Note that object serialization (described later in this chapter) may be an easier way to store and retrieve complex data structures.

12-6-2-2. Reading and writing random access files (6)▲

As previously noted, the RandomAccessFile is almost totally isolated from the rest of the I/O hierarchy, save for the fact that it implements the DataInput and DataOutput interfaces. So you cannot combine it with any of the aspects of the InputStream and OutputStream subclasses. Even though it might make sense to treat a ByteArrayInputStream as a random-access element, you can use RandomAccessFile only to open a file. You must assume a RandomAccessFile is properly buffered since you cannot add that.

The one option you have is in the second constructor argument: you can open a RandomAccessFile to read ("r") or read and write ("rw").

Using a RandomAccessFile is like using a combined DataInputStream and DataOutputStream (because it implements the equivalent interfaces). In addition, you can see that seek( ) is used to move about in the file and change one of the values.

With the advent of new I/O in JDK 1.4, you may want to consider using memory-mapped files instead of RandomAccessFile.

12-6-3. Piped streams▲

The PipedInputStream, PipedOutputStream, PipedReader and PipedWriter have been mentioned only briefly in this chapter. This is not to suggest that they aren't useful, but their value is not apparent until you begin to understand multithreading, since the piped streams are used to communicate between threads. This is covered along with an example in Chapter 13.

12-7. File reading & writing utilities▲

A very common programming task is to read a file into memory, modify it, and then write it out again. One of the problems with the Java I/O library is that it requires you to write quite a bit of code in order to perform these common operations; there are no basic helper functions to do them for you. What's worse, the decorators make it rather hard to remember how to open files. Thus, it makes sense to add helper classes to your library that will easily perform these basic tasks for you. Here's one that contains static methods to read and write text files as a single string. In addition, you can create a TextFile class that holds the lines of the file in an ArrayList (so you have all the ArrayList functionality available while manipulating the file contents):

//: com:bruceeckel:util:TextFile.java
// Static functions for reading and writing text files as
// a single string, and treating a file as an ArrayList.
// {Clean: test.txt test2.txt}
package com.bruceeckel.util;
import java.io.*;
import java.util.*;

public class TextFile extends ArrayList {
  // Tools to read and write files as single strings:
  public static String
  read(String fileName) throws IOException {
    StringBuffer sb = new StringBuffer();
    BufferedReader in =
      new BufferedReader(new FileReader(fileName));
    String s;
    while((s = in.readLine()) != null) {
      sb.append(s);
      sb.append("\n");
    }
    in.close();
    return sb.toString();
  }
  public static void
  write(String fileName, String text) throws IOException {
    PrintWriter out = new PrintWriter(
      new BufferedWriter(new FileWriter(fileName)));
    out.print(text);
    out.close();
  }
  public TextFile(String fileName) throws IOException {
    super(Arrays.asList(read(fileName).split("\n")));
  }
  public void write(String fileName) throws IOException {
    PrintWriter out = new PrintWriter(
      new BufferedWriter(new FileWriter(fileName)));
    for(int i = 0; i < size(); i++)
      out.println(get(i));
    out.close();
  }
  // Simple test:
  public static void main(String[] args) throws Exception {
    String file = read("TextFile.java");
    write("test.txt", file);
    TextFile text = new TextFile("test.txt");
    text.write("test2.txt");
  }
} ///:~

All methods simply pass IOExceptions out to the caller. read( ) appends each line to a StringBuffer (for efficiency) followed by a newline, because that is stripped out during reading. Then it returns a String containing the whole file. write( ) opens and writes the text to the file. Both methods remember to close( ) the file when they are done.

The constructor uses the read( ) method to turn the file into a String, then uses String.split( ) to divide the result into lines along newline boundaries (if you use this class a lot, you may want to rewrite this constructor to improve efficiency). Alas, there is no corresponding "join" method, so the non-static write( ) method must write the lines out by hand.

In main( ), a basic test is performed to ensure that the methods work. Although this is a small amount of code, using it can save a lot of time and make your life easier, as you'll see in some of the examples later in this chapter.

12-8. Standard I/O▲

The term standard I/O refers to the Unix concept (which is reproduced in some form in Windows and many other operating systems) of a single stream of information that is used by a program. All the program's input can come from standard input, all its output can go to standard output, and all of its error messages can be sent to standard error. The value of standard I/O is that programs can easily be chained together, and one program's standard output can become the standard input for another program. This is a powerful tool.

12-8-1. Reading from standard input▲

Following the standard I/O model, Java has System.in, System.out, and System.err. Throughout this book, you've seen how to write to standard output using System.out, which is already prewrapped as a PrintStream object. System.err is likewise a PrintStream, but System.in is a raw InputStream with no wrapping. This means that although you can use System.out and System.err right away, System.in must be wrapped before you can read from it.

Typically, you'll want to read input a line at a time using readLine( ), so you'll want to wrap System.in in a BufferedReader. To do this, you must convert System.in to a Reader using InputStreamReader. Here's an example that simply echoes each line that you type in:

//: c12:Echo.java
// How to read from standard input.
// {RunByHand}
import java.io.*;

public class Echo {
  public static void main(String[] args)
  throws IOException {
    BufferedReader in = new BufferedReader(
      new InputStreamReader(System.in));
    String s;
    while((s = in.readLine()) != null && s.length() != 0)
      System.out.println(s);
    // An empty line or Ctrl-Z terminates the program
  }
} ///:~

The reason for the exception specification is that readLine( ) can throw an IOException. Note that System.in should usually be buffered, as with most streams.

12-8-2. Changing System.out to a PrintWriter▲

System.out is a PrintStream, which is an OutputStream. PrintWriter has a constructor that takes an OutputStream as an argument. Thus, if you want, you can convert System.out into a PrintWriter using that constructor:

//: c12:ChangeSystemOut.java
// Turn System.out into a PrintWriter.
import com.bruceeckel.simpletest.*;
import java.io.*;

public class ChangeSystemOut {
  private static Test monitor = new Test();
  public static void main(String[] args) {
    PrintWriter out = new PrintWriter(System.out, true);
    out.println("Hello, world");
    monitor.expect(new String[] {
      "Hello, world"
    });
  }
} ///:~

It's important to use the two-argument version of the PrintWriter constructor and to set the second argument to true in order to enable automatic flushing; otherwise, you may not see the output.
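The effect of the second constructor argument can be observed by sending the output into memory instead of to the console. The sketch below is an illustration only; the class name AutoFlushSketch and the helper method are hypothetical. It writes one line through a PrintWriter and counts how many bytes have actually reached the underlying stream before any flush( ) or close( ).

```java
import java.io.ByteArrayOutputStream;
import java.io.PrintWriter;

public class AutoFlushSketch {
  // Writes one line through a PrintWriter and reports how many bytes have
  // reached the underlying stream before any explicit flush() or close().
  public static int bytesVisibleAfterPrintln(boolean autoFlush) {
    ByteArrayOutputStream sink = new ByteArrayOutputStream();
    PrintWriter out = new PrintWriter(sink, autoFlush);
    out.println("Hello, world");
    return sink.size();
  }

  public static void main(String[] args) {
    System.out.println(
      "without autoflush: " + bytesVisibleAfterPrintln(false));
    System.out.println(
      "with autoflush: " + bytesVisibleAfterPrintln(true));
  }
}
```

Without automatic flushing the line is still sitting in the PrintWriter's internal buffer, so the count is zero. With it, println( ) pushes the text and the platform's line separator through immediately.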

12-8-3. Redirecting standard I/O▲

The Java System class allows you to redirect the standard input, output, and error I/O streams using simple static method calls:

setIn(InputStream)

setOut(PrintStream)

setErr(PrintStream)

Redirecting output is especially useful if you suddenly start creating a large amount of output on your screen, and it's scrolling past faster than you can read it. (64) Redirecting input is valuable for a command-line program in which you want to test a particular user-input sequence repeatedly. Here's a simple example that shows the use of these methods:

//: c12:Redirecting.java
// Demonstrates standard I/O redirection.
// {Clean: test.out}
import java.io.*;

public class Redirecting {
  // Throw exceptions to console:
  public static void main(String[] args)
  throws IOException {
    PrintStream console = System.out;
    BufferedInputStream in = new BufferedInputStream(
      new FileInputStream("Redirecting.java"));
    PrintStream out = new PrintStream(
      new BufferedOutputStream(
        new FileOutputStream("test.out")));
    System.setIn(in);
    System.setOut(out);
    System.setErr(out);
    BufferedReader br = new BufferedReader(
      new InputStreamReader(System.in));
    String s;
    while((s = br.readLine()) != null)
      System.out.println(s);
    out.close(); // Remember this!
    System.setOut(console);
  }
} ///:~

This program attaches standard input to a file and redirects standard output and standard error to another file.

I/O redirection manipulates streams of bytes, not streams of characters, thus InputStreams and OutputStreams are used rather than Readers and Writers.
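The same setOut( ) call can redirect output into memory, which is convenient for capturing what a piece of code prints. The following sketch is an illustration, not part of the book's examples: the class name CaptureOutSketch and the capture( ) helper are hypothetical. It restores the original stream in a finally block so that an exception cannot leave System.out pointing at the replacement.

```java
import java.io.ByteArrayOutputStream;
import java.io.PrintStream;

public class CaptureOutSketch {
  // Runs 'task' with System.out redirected into memory, then restores the
  // original stream in a finally block so a failure cannot leave it swapped.
  public static String capture(Runnable task) {
    PrintStream console = System.out;
    ByteArrayOutputStream bytes = new ByteArrayOutputStream();
    PrintStream replacement = new PrintStream(bytes, true);
    System.setOut(replacement);
    try {
      task.run();
    } finally {
      replacement.flush();
      System.setOut(console);
    }
    return bytes.toString();
  }

  public static void main(String[] args) {
    String text = capture(new Runnable() {
      public void run() { System.out.print("captured"); }
    });
    System.out.println("Got: " + text);
  }
}
```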

12-9. New I/O▲

The Java "new" I/O library, introduced in JDK 1.4 in the java.nio.* packages, has one goal: speed. In fact, the "old" I/O packages have been reimplemented using nio in order to take advantage of this speed increase, so you will benefit even if you don't explicitly write code with nio. The speed increase occurs in both file I/O, which is explored here, (65) and in network I/O, which is covered in Thinking in Enterprise Java.

The speed comes from using structures that are closer to the operating system's way of performing I/O: channels and buffers. You could think of it as a coal mine; the channel is the mine containing the seam of coal (the data), and the buffer is the cart that you send into the mine. The cart comes back full of coal, and you get the coal from the cart. That is, you don't interact directly with the channel; you interact with the buffer and send the buffer into the channel. The channel either pulls data from the buffer, or puts data into the buffer.

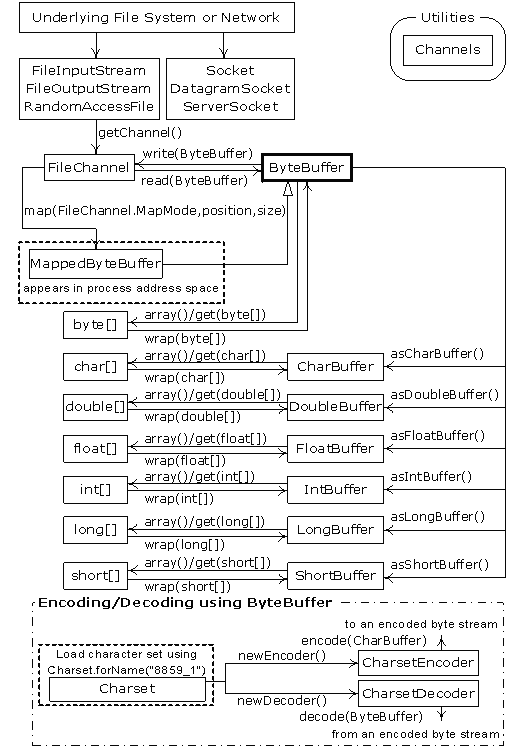

The only kind of buffer that communicates directly with a channel is a ByteBuffer, that is, a buffer that holds raw bytes. If you look at the JDK documentation for java.nio.ByteBuffer, you'll see that it's fairly basic: You create one by telling it how much storage to allocate, and there is a selection of methods to put and get data, in either raw byte form or as primitive data types. But there's no way to put or get an object, or even a String. It's fairly low-level, precisely because this makes a more efficient mapping with most operating systems.

Three of the classes in the "old" I/O have been modified so that they produce a FileChannel: FileInputStream, FileOutputStream, and, for both reading and writing, RandomAccessFile. Notice that these are the byte manipulation streams, in keeping with the low-level nature of nio. The Reader and Writer character-mode classes do not produce channels, but the class java.nio.channels.Channels has utility methods to produce Readers and Writers from channels.
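As a sketch of the Channels utility methods just mentioned, the code below layers a Writer and then a Reader over FileChannels using Channels.newWriter( ) and Channels.newReader( ). The class name ChannelReaderSketch, the roundTrip( ) helper, the temporary file, and the choice of UTF-8 are all assumptions made for illustration, and try-with-resources requires Java 7 or later.

```java
import java.io.BufferedReader;
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.Writer;
import java.nio.channels.Channels;
import java.nio.channels.FileChannel;

public class ChannelReaderSketch {
  // Writes one line through a Writer layered on a FileChannel, then reads
  // it back through a Reader layered on another FileChannel.
  public static String roundTrip(File f, String line) throws IOException {
    try (FileChannel out = new FileOutputStream(f).getChannel();
         Writer w = Channels.newWriter(out, "UTF-8")) {
      w.write(line);
      w.write('\n');
    }
    try (FileChannel in = new FileInputStream(f).getChannel();
         BufferedReader r =
           new BufferedReader(Channels.newReader(in, "UTF-8"))) {
      return r.readLine();
    }
  }

  public static void main(String[] args) throws IOException {
    File f = File.createTempFile("channel", ".txt");
    f.deleteOnExit();
    System.out.println(roundTrip(f, "Some text"));
  }
}
```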

Here's a simple example that exercises all three types of stream to produce channels that are writeable, read/writeable, and readable:

//: c12:GetChannel.java
// Getting channels from streams
// {Clean: data.txt}
import java.io.*;
import java.nio.*;
import java.nio.channels.*;

public class GetChannel {
  private static final int BSIZE = 1024;
  public static void main(String[] args) throws Exception {
    // Write a file:
    FileChannel fc =
      new FileOutputStream("data.txt").getChannel();
    fc.write(ByteBuffer.wrap("Some text ".getBytes()));
    fc.close();
    // Add to the end of the file:
    fc =
      new RandomAccessFile("data.txt", "rw").getChannel();
    fc.position(fc.size()); // Move to the end
    fc.write(ByteBuffer.wrap("Some more".getBytes()));
    fc.close();
    // Read the file:
    fc = new FileInputStream("data.txt").getChannel();
    ByteBuffer buff = ByteBuffer.allocate(BSIZE);
    fc.read(buff);
    buff.flip();
    while(buff.hasRemaining())
      System.out.print((char)buff.get());
  }
} ///:~

For any of the stream classes shown here, getChannel( ) will produce a FileChannel. A channel is fairly basic: You can hand it a ByteBuffer for reading or writing, and you can lock regions of the file for exclusive access (this will be described later).

One way to put bytes into a ByteBuffer is to stuff them in directly using one of the "put" methods, to put one or more bytes, or values of primitive types. However, as seen here, you can also "wrap" an existing byte array in a ByteBuffer using the wrap( ) method. When you do this, the underlying array is not copied, but instead is used as the storage for the generated ByteBuffer. We say that the ByteBuffer is "backed by" the array.
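A short sketch can show what "backed by" means in practice: a change made through the array is visible through the buffer, because both refer to the same storage. The class name WrapSketch and its helper method are hypothetical names used only for this illustration.

```java
import java.nio.ByteBuffer;

public class WrapSketch {
  // Changes the array after wrapping it and reports what the buffer sees.
  public static byte changeArrayThenReadBuffer() {
    byte[] storage = { 1, 2, 3 };
    ByteBuffer buffer = ByteBuffer.wrap(storage);
    storage[0] = 42;        // Write through the array ...
    return buffer.get(0);   // ... and read through the buffer
  }

  public static void main(String[] args) {
    byte[] storage = "Some text".getBytes();
    ByteBuffer buffer = ByteBuffer.wrap(storage);
    System.out.println(buffer.hasArray());            // true
    System.out.println(buffer.array() == storage);    // true
    System.out.println(changeArrayThenReadBuffer());  // 42
  }
}
```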

The data.txt file is reopened using a RandomAccessFile. Notice that you can move the FileChannel around in the file; here, it is moved to the end so that additional writes will be appended.

For read-only access, you must explicitly allocate a ByteBuffer using the static allocate( ) method. The goal of nio is to rapidly move large amounts of data, so the size of the ByteBuffer should be significant; in fact, the 1K used here is probably quite a bit smaller than you'd normally want to use (you'll have to experiment with your working application to find the best size).

It's also possible to go for even more speed by using allocateDirect( ) instead of allocate( ) to produce a "direct" buffer that may have an even higher coupling with the operating system. However, the overhead in such an allocation is greater, and the actual implementation varies from one operating system to another, so again, you must experiment with your working application to discover whether direct buffers will buy you any advantage in speed.

Once you call read( ) to tell the FileChannel to store bytes into the ByteBuffer, you must call flip( ) on the buffer to tell it to get ready to have its bytes extracted (yes, this seems a bit crude, but remember that it's very low-level and is done for maximum speed). And if we were to use the buffer for further read( ) operations, we'd also have to call clear( ) to prepare it for each read( ). You can see this in a simple file copying program:

//: c12:ChannelCopy.java
// Copying a file using channels and buffers
// {Args: ChannelCopy.java test.txt}
// {Clean: test.txt}
import java.io.*;
import java.nio.*;
import java.nio.channels.*;

public class ChannelCopy {
  private static final int BSIZE = 1024;
  public static void main(String[] args) throws Exception {
    if(args.length != 2) {
      System.out.println("arguments: sourcefile destfile");
      System.exit(1);
    }
    FileChannel
      in = new FileInputStream(args[0]).getChannel(),
      out = new FileOutputStream(args[1]).getChannel();
    ByteBuffer buffer = ByteBuffer.allocate(BSIZE);
    while(in.read(buffer) != -1) {
      buffer.flip(); // Prepare for writing
      out.write(buffer);
      buffer.clear(); // Prepare for reading
    }
  }
} ///:~

You can see that one FileChannel is opened for reading, and one for writing. A ByteBuffer is allocated, and when FileChannel.read( ) returns -1 (a holdover, no doubt, from Unix and C), it means that you've reached the end of the input. After each read( ), which puts data into the buffer, flip( ) prepares the buffer so that its information can be extracted by the write( ). After the write( ), the information is still in the buffer, and clear( ) resets all the internal pointers so that it's ready to accept data during another read( ).
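The "internal pointers" that flip( ) and clear( ) adjust are the buffer's position and limit. The sketch below prints them after each step so the bookkeeping is visible. The class name FlipClearSketch and the state( ) helper are hypothetical, and no channel is involved; the bytes are simply put into the buffer by hand.

```java
import java.nio.ByteBuffer;

public class FlipClearSketch {
  // Formats the two indices that flip() and clear() manipulate.
  public static String state(ByteBuffer b) {
    return "position=" + b.position() + " limit=" + b.limit();
  }

  public static void main(String[] args) {
    ByteBuffer buffer = ByteBuffer.allocate(8);
    System.out.println("new:   " + state(buffer)); // position=0 limit=8
    buffer.put((byte) 1).put((byte) 2).put((byte) 3);
    System.out.println("put:   " + state(buffer)); // position=3 limit=8
    buffer.flip();
    System.out.println("flip:  " + state(buffer)); // position=0 limit=3
    buffer.clear();
    System.out.println("clear: " + state(buffer)); // position=0 limit=8
  }
}
```

After flip( ), the limit marks the end of the data that was stored, so a write( ) extracts exactly those bytes. Note that clear( ) does not erase anything; it only resets the indices.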

The preceding program is not the ideal way to handle this kind of operation, however. Special methods transferTo( ) and transferFrom( ) allow you to connect one channel directly to another:

//: c12:TransferTo.java
// Using transferTo() between channels
// {Args: TransferTo.java TransferTo.txt}
// {Clean: TransferTo.txt}
import java.io.*;
import java.nio.*;
import java.nio.channels.*;

public class TransferTo {
  public static void main(String[] args) throws Exception {
    if(args.length != 2) {
      System.out.println("arguments: sourcefile destfile");
      System.exit(1);
    }
    FileChannel
      in = new FileInputStream(args[0]).getChannel(),
      out = new FileOutputStream(args[1]).getChannel();
    in.transferTo(0, in.size(), out);
    // Or:
    // out.transferFrom(in, 0, in.size());
  }
} ///:~

You won't do this kind of thing very often, but it's good to know about.
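One detail worth knowing if you do use it: transferTo( ) returns the number of bytes it actually moved, which may be fewer than the number requested. A more defensive copy therefore repeats the call until the whole file has been transferred. The sketch below is one way to do that, under assumptions: the class name TransferLoopSketch, the copy( ) helper, and the temporary files are hypothetical, Java 7 or later is required, and the loop assumes the source file is not modified while it runs.

```java
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.nio.channels.FileChannel;
import java.nio.file.Files;

public class TransferLoopSketch {
  // Copies 'source' to 'dest', repeating transferTo() until every byte has
  // been moved, since one call may transfer fewer bytes than requested.
  public static long copy(File source, File dest) throws IOException {
    try (FileChannel in = new FileInputStream(source).getChannel();
         FileChannel out = new FileOutputStream(dest).getChannel()) {
      long size = in.size();
      long position = 0;
      while (position < size)
        position += in.transferTo(position, size - position, out);
      return position;
    }
  }

  public static void main(String[] args) throws IOException {
    File source = File.createTempFile("source", ".bin");
    File dest = File.createTempFile("dest", ".bin");
    source.deleteOnExit();
    dest.deleteOnExit();
    Files.write(source.toPath(), new byte[5000]);
    System.out.println("Copied " + copy(source, dest) + " bytes");
  }
}
```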

12-9-1. Converting data▲

If you look back at GetChannel.java, you'll notice that, to print the information in the file, we are pulling the data out one byte at a time and casting each byte to a char. This seems a bit primitive. If you look at the java.nio.CharBuffer class, you'll see that it has a toString( ) method that says: "Returns a string containing the characters in this buffer." Since a ByteBuffer can be viewed as a CharBuffer with the asCharBuffer( ) method, why not use that? As you can see from the first line in the expect( ) statement below, this doesn't work out:

//: c12:BufferToText.java
// Converting text to and from ByteBuffers
// {Clean: data2.txt}
import java.io.*;
import java.nio.*;
import java.nio.channels.*;
import java.nio.charset.*;
import com.bruceeckel.simpletest.*;

public class BufferToText {
  private static Test monitor = new Test();
  private static final int BSIZE = 1024;
  public static void main(String[] args) throws Exception {
    FileChannel fc =
      new FileOutputStream("data2.txt").getChannel();
    fc.write(ByteBuffer.wrap("Some text".getBytes()));
    fc.close();
    fc = new FileInputStream("data2.txt").getChannel();
    ByteBuffer buff = ByteBuffer.allocate(BSIZE);
    fc.read(buff);
    buff.flip();
    // Doesn't work:
    System.out.println(buff.asCharBuffer());
    // Decode using this system's default Charset:
    buff.rewind();
    String encoding = System.getProperty("file.encoding");
    System.out.println("Decoded using " + encoding + ": "
      + Charset.forName(encoding).decode(buff));
    // Or, we could encode with something that will print:
    fc = new FileOutputStream("data2.txt").getChannel();
    fc.write(ByteBuffer.wrap(
      "Some text".getBytes("UTF-16BE")));
    fc.close();
    // Now try reading again:
    fc = new FileInputStream("data2.txt").getChannel();
    buff.clear();
    fc.read(buff);
    buff.flip();
    System.out.println(buff.asCharBuffer());
    // Use a CharBuffer to write through:
    fc = new FileOutputStream("data2.txt").getChannel();
    buff = ByteBuffer.allocate(24); // More than needed
    buff.asCharBuffer().put("Some text");
    fc.write(buff);
    fc.close();
    // Read and display:
    fc = new FileInputStream("data2.txt").getChannel();
    buff.clear();
    fc.read(buff);
    buff.flip();
    System.out.println(buff.asCharBuffer());
    monitor.expect(new String[] {
      "????",
      "%% Decoded using [A-Za-z0-9_\\-]+: Some text",
      "Some text",
      "Some text\0\0\0"
    });
  }
} ///:~

The buffer contains plain bytes, and to turn these into characters we must either encode them as we put them in (so that they will be meaningful when they come out) or decode them as they come out of the buffer. This can be accomplished using the java.nio.charset.Charset class, which provides tools for encoding into many different types of character sets:

//: c12:AvailableCharSets.java
// Displays Charsets and aliases
import java.nio.charset.*;
import java.util.*;
import com.bruceeckel.simpletest.*;

public class AvailableCharSets {
  private static Test monitor = new Test();
  public static void main(String[] args) {
    Map charSets = Charset.availableCharsets();
    Iterator it = charSets.keySet().iterator();
    while(it.hasNext()) {
      String csName = (String)it.next();
      System.out.print(csName);
      Iterator aliases = ((Charset)charSets.get(csName))
        .aliases().iterator();
      if(aliases.hasNext())
        System.out.print(": ");
      while(aliases.hasNext()) {
        System.out.print(aliases.next());
        if(aliases.hasNext())
          System.out.print(", ");
      }
      System.out.println();
    }
    monitor.expect(new String[] {
      "Big5: csBig5",
      "Big5-HKSCS: big5-hkscs, Big5_HKSCS, big5hkscs",
      "EUC-CN",
      "EUC-JP: eucjis, x-eucjp, csEUCPkdFmtjapanese, " +
      "eucjp, Extended_UNIX_Code_Packed_Format_for" +
      "_Japanese, x-euc-jp, euc_jp",
      "euc-jp-linux: euc_jp_linux",
      "EUC-KR: ksc5601, 5601, ksc5601_1987, ksc_5601, " +
      "ksc5601-1987, euc_kr, ks_c_5601-1987, " +
      "euckr, csEUCKR",
      "EUC-TW: cns11643, euc_tw, euctw",
      "GB18030: gb18030-2000",
      "GBK: GBK",
      "ISCII91: iscii, ST_SEV_358-88, iso-ir-153, " +
      "csISO153GOST1976874",
      "ISO-2022-CN-CNS: ISO2022CN_CNS",
      "ISO-2022-CN-GB: ISO2022CN_GB",
      "ISO-2022-KR: ISO2022KR, csISO2022KR",
      "ISO-8859-1: iso-ir-100, 8859_1, ISO_8859-1, " +
      "ISO8859_1, 819, csISOLatin1, IBM-819, " +
      "ISO_8859-1:1987, latin1, cp819, ISO8859-1, " +
      "IBM819, ISO_8859_1, l1",
      "ISO-8859-13",
      "ISO-8859-15: 8859_15, csISOlatin9, IBM923, cp923," +
      " 923, L9, IBM-923, ISO8859-15, LATIN9, " +
      "ISO_8859-15, LATIN0, csISOlatin0, " +
      "ISO8859_15_FDIS, ISO-8859-15",
      "ISO-8859-2", "ISO-8859-3", "ISO-8859-4",
      "ISO-8859-5", "ISO-8859-6", "ISO-8859-7",
      "ISO-8859-8", "ISO-8859-9",
      "JIS0201: X0201, JIS_X0201, csHalfWidthKatakana",
      "JIS0208: JIS_C6626-1983, csISO87JISX0208, x0208, " +
      "JIS_X0208-1983, iso-ir-87",
      "JIS0212: jis_x0212-1990, x0212, iso-ir-159, " +
      "csISO159JISC02121990",
      "Johab: ms1361, ksc5601_1992, ksc5601-1992",
      "KOI8-R",
      "Shift_JIS: shift-jis, x-sjis, ms_kanji, " +
      "shift_jis, csShiftJIS, sjis, pck",
      "TIS-620",
      "US-ASCII: IBM367, ISO646-US, ANSI_X3.4-1986, " +
      "cp367, ASCII, iso_646.irv:1983, 646, us, iso-ir-6,"+
      " csASCII, ANSI_X3.4-1968, ISO_646.irv:1991",
      "UTF-16: UTF_16",
      "UTF-16BE: X-UTF-16BE, UTF_16BE, ISO-10646-UCS-2",
      "UTF-16LE: UTF_16LE, X-UTF-16LE",
      "UTF-8: UTF8", "windows-1250", "windows-1251",
      "windows-1252: cp1252",
      "windows-1253", "windows-1254", "windows-1255",
      "windows-1256", "windows-1257", "windows-1258",
      "windows-936: ms936, ms_936",
      "windows-949: ms_949, ms949", "windows-950: ms950",
    });
  }
} ///:~

So, returning to BufferToText.java, if you rewind( ) the buffer (to go back to the beginning of the data) and then use the platform's default character set to decode( ) the data, the resulting CharBuffer will print to the console just fine. To discover the default character set, use System.getProperty("file.encoding"), which produces the string that names the character set. Passing this to Charset.forName( ) produces the Charset object that can be used to decode the bytes.
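
As a minimal sketch of that lookup (the class DefaultCharsetDemo and its roundTrip( ) helper are made up for illustration and are not part of BufferToText.java), the following encodes a string with the default character set and decodes it again. It assumes that the file.encoding property is set, that it names a character set the JVM supports, and that the sample text can be represented in that character set:

```java
import java.nio.ByteBuffer;
import java.nio.CharBuffer;
import java.nio.charset.Charset;

public class DefaultCharsetDemo {
  // Hypothetical helper: encode with the default charset, then decode again.
  static String roundTrip(String text) {
    // Assumption: the property is set and names a supported charset
    String encoding = System.getProperty("file.encoding");
    Charset cs = Charset.forName(encoding);
    // encode() hands back a buffer that is ready to be read from its start
    ByteBuffer bytes = cs.encode(text);
    CharBuffer chars = cs.decode(bytes);
    return chars.toString();
  }

  public static void main(String[] args) {
    // The name printed here varies from platform to platform
    System.out.println(System.getProperty("file.encoding"));
    System.out.println(roundTrip("Some text"));
  }
}
```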

Another alternative is to encode( ) using a character set that will result in something printable when the file is read, as you see in the third part of BufferToText.java. Here, UTF-16BE is used to write the text into the file, and when it is read, all you have to do is convert it to a CharBuffer, and it produces the expected text.
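
The same idea can be shown without a file. This is only a sketch (Utf16beDemo and encodeThenView( ) are illustrative names): the text is encoded as UTF-16BE, and because a ByteBuffer is big endian by default, a plain CharBuffer view of the encoded bytes yields the original characters:

```java
import java.nio.ByteBuffer;
import java.nio.charset.Charset;

public class Utf16beDemo {
  // Hypothetical helper: encode as UTF-16BE, then look at the bytes as chars.
  static String encodeThenView(String text) {
    ByteBuffer bb = Charset.forName("UTF-16BE").encode(text);
    // Two bytes per char, most significant byte first: this matches
    // the layout that a big endian CharBuffer view expects.
    return bb.asCharBuffer().toString();
  }

  public static void main(String[] args) {
    System.out.println(encodeThenView("Some text")); // prints "Some text"
  }
}
```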

Finally, you see what happens if you write to the ByteBuffer through a CharBuffer (you'll learn more about this later). Note that 24 bytes are allocated for the ByteBuffer. Since each char requires two bytes, this is enough for 12 chars, but "Some text" only has 9. The remaining zero bytes still appear in the representation of the CharBuffer produced by its toString( ), as you can see in the output.
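
Here is a small sketch of the trailing zeroes just described (TrailingZerosDemo and viewAfterPut( ) are names invented for this illustration). It writes 9 chars into a 24-byte buffer and then measures what the CharBuffer view reports:

```java
import java.nio.ByteBuffer;

public class TrailingZerosDemo {
  // Hypothetical helper: write text through a view, then read the whole view.
  static String viewAfterPut(String text, int byteCapacity) {
    ByteBuffer bb = ByteBuffer.allocate(byteCapacity);
    // The view has its own position; bb's position stays at zero
    bb.asCharBuffer().put(text);
    // A fresh view covers all of bb, including the unused zero bytes
    return bb.asCharBuffer().toString();
  }

  public static void main(String[] args) {
    String s = viewAfterPut("Some text", 24);
    System.out.println(s.length());        // 12, not 9
    System.out.println(s.trim().length()); // 9: trim() drops the '\0' chars
  }
}
```

If the padding is unwanted, one option is to size the buffer to the data (two bytes per char) rather than trimming afterward.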

12-9-2. Fetching primitives▲

Although a ByteBuffer only holds bytes, it contains methods to produce each of the different types of primitive values from the bytes it contains. This example shows the insertion and extraction of various values using these methods:

//: c12:GetData.java

// Getting different representations from a ByteBuffer

import java.nio.*;

import com.bruceeckel.simpletest.*;

public class GetData {

private static Test monitor = new Test();

private static final int BSIZE = 1024;

public static void main(String[] args) {

ByteBuffer bb = ByteBuffer.allocate(BSIZE);

// Allocation automatically zeroes the ByteBuffer:

int i = 0;

while(i++ < bb.limit())

if(bb.get() != 0)

System.out.println("nonzero");

System.out.println("i = " + i);

bb.rewind();

// Store and read a char array:

bb.asCharBuffer().put("Howdy!");

char c;

while((c = bb.getChar()) != 0)

System.out.print(c + " ");

System.out.println();

bb.rewind();

// Store and read a short:

bb.asShortBuffer().put((short)471142);

System.out.println(bb.getShort());

bb.rewind();

// Store and read an int:

bb.asIntBuffer().put(99471142);

System.out.println(bb.getInt());

bb.rewind();

// Store and read a long:

bb.asLongBuffer().put(99471142);

System.out.println(bb.getLong());

bb.rewind();

// Store and read a float:

bb.asFloatBuffer().put(99471142);

System.out.println(bb.getFloat());

bb.rewind();

// Store and read a double:

bb.asDoubleBuffer().put(99471142);

System.out.println(bb.getDouble());

bb.rewind();

monitor.expect(new String[] {

"i = 1025",

"H o w d y ! ",

"12390", // Truncation changes the value

"99471142",

"99471142",

"9.9471144E7",

"9.9471142E7"

});

}

} ///:~

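After a ByteBuffer is allocated, its values are checked to see whether buffer allocation automatically zeroes the contents, and it does. All 1,024 values are checked (up to the limit( ) of the buffer), and all are zero. (The final value of i is 1025 rather than 1024 because the post-increment in the loop test is applied one last time when the test fails.)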

The easiest way to insert primitive values into a ByteBuffer is to get the appropriate "view" on that buffer using asCharBuffer( ), asShortBuffer( ), etc., and then to use that view's put( ) method. You can see this is the process used for each of the primitive data types. The only one of these that is a little odd is the put( ) for the ShortBuffer, which requires a cast (note that the cast truncates and changes the resulting value). None of the other view buffers require casting in their put( ) methods.
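
The cast is needed because Java will not implicitly narrow an int argument to a short in a method call. The following sketch (ShortViewDemo and storeAndRead( ) are illustrative names, not part of GetData.java) shows where the value 12390 comes from: only the low 16 bits of 471142 survive the cast.

```java
import java.nio.ByteBuffer;

public class ShortViewDemo {
  // Hypothetical helper: store an int as a short through a view, read it back.
  static short storeAndRead(int value) {
    ByteBuffer bb = ByteBuffer.allocate(2);
    bb.asShortBuffer().put((short) value); // The cast keeps the low 16 bits
    return bb.getShort();
  }

  public static void main(String[] args) {
    System.out.println(storeAndRead(471142)); // 12390
    System.out.println(471142 & 0xFFFF);      // 12390, the same low 16 bits
    System.out.println(storeAndRead(1234));   // 1234: fits, so it is unchanged
  }
}
```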

12-9-3. View buffers▲

A "view buffer" allows you to look at an underlying ByteBuffer through the window of a particular primitive type. The ByteBuffer is still the actual storage that's "backing" the view, so any changes you make to the view are reflected in modifications to the data in the ByteBuffer. As seen in the previous example, this allows you to conveniently insert primitive types into a ByteBuffer. A view also allows you to read primitive values from a ByteBuffer, either one at a time (as ByteBuffer allows) or in batches (into arrays). Here's an example that manipulates ints in a ByteBuffer via an IntBuffer:

//: c12:IntBufferDemo.java

// Manipulating ints in a ByteBuffer with an IntBuffer

import java.nio.*;

import com.bruceeckel.simpletest.*;

import com.bruceeckel.util.*;

public class IntBufferDemo {

private static Test monitor = new Test();

private static final int BSIZE = 1024;

public static void main(String[] args) {

ByteBuffer bb = ByteBuffer.allocate(BSIZE);

IntBuffer ib = bb.asIntBuffer();

// Store an array of int:

ib.put(new int[] { 11, 42, 47, 99, 143, 811, 1016 });

// Absolute location read and write:

System.out.println(ib.get(3));

ib.put(3, 1811);

ib.rewind();

while(ib.hasRemaining()) {

int i = ib.get();

if(i == 0) break; // Else we'll get the entire buffer

System.out.println(i);

}

monitor.expect(new String[] {

"99",

"11",

"42",

"47",

"1811",

"143",

"811",

"1016"

});

}

} ///:~

The overloaded put( ) method is first used to store an array of int. The following get( ) and put( ) method calls directly access an int location in the underlying ByteBuffer. Note that these absolute location accesses are available for primitive types by talking directly to a ByteBuffer, as well.
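
To make that last point concrete, here is a sketch (AbsoluteAccessDemo and its method are names made up for this illustration) that reads and overwrites the same int through the ByteBuffer itself. The thing to watch is that a ByteBuffer index counts bytes while an IntBuffer index counts ints, so element 3 of the view lives at byte offset 12. The sketch also reads the view in a batch, into an array:

```java
import java.nio.ByteBuffer;
import java.nio.IntBuffer;
import java.util.Arrays;

public class AbsoluteAccessDemo {
  // Hypothetical helper: overwrite one int via the ByteBuffer, then
  // return everything that the IntBuffer view sees.
  static int[] overwriteThroughByteBuffer() {
    ByteBuffer bb = ByteBuffer.allocate(4 * 4); // Room for four ints
    IntBuffer ib = bb.asIntBuffer();
    ib.put(new int[] { 11, 42, 47, 99 });
    // Index 3 in the view is byte offset 3 * 4 = 12 in the ByteBuffer
    if (bb.getInt(12) != ib.get(3))
      throw new IllegalStateException("view and buffer disagree");
    bb.putInt(12, 1811); // The change is visible through the view
    int[] all = new int[ib.capacity()];
    ib.rewind();
    ib.get(all); // Batch read into an array
    return all;
  }

  public static void main(String[] args) {
    // Prints [11, 42, 47, 1811]
    System.out.println(Arrays.toString(overwriteThroughByteBuffer()));
  }
}
```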

Once the underlying ByteBuffer is filled with ints or some other primitive type via a view buffer, then that ByteBuffer can be written directly to a channel. You can just as easily read from a channel and use a view buffer to convert everything to a particular type of primitive. Here's an example that interprets the same sequence of bytes as short, int, float, long, and double by producing different view buffers on the same ByteBuffer:

//: c12:ViewBuffers.java

import java.nio.*;

import com.bruceeckel.simpletest.*;

public class ViewBuffers {

private static Test monitor = new Test();

public static void main(String[] args) {

ByteBuffer bb = ByteBuffer.wrap(

new byte[]{ 0, 0, 0, 0, 0, 0, 0, 'a' });

bb.rewind();

System.out.println("Byte Buffer");

while(bb.hasRemaining())

System.out.println(bb.position()+ " -> " + bb.get());

CharBuffer cb =

((ByteBuffer)bb.rewind()).asCharBuffer();

System.out.println("Char Buffer");

while(cb.hasRemaining())

System.out.println(cb.position()+ " -> " + cb.get());

FloatBuffer fb =

((ByteBuffer)bb.rewind()).asFloatBuffer();

System.out.println("Float Buffer");

while(fb.hasRemaining())

System.out.println(fb.position()+ " -> " + fb.get());

IntBuffer ib =

((ByteBuffer)bb.rewind()).asIntBuffer();

System.out.println("Int Buffer");

while(ib.hasRemaining())

System.out.println(ib.position()+ " -> " + ib.get());

LongBuffer lb =

((ByteBuffer)bb.rewind()).asLongBuffer();

System.out.println("Long Buffer");

while(lb.hasRemaining())

System.out.println(lb.position()+ " -> " + lb.get());

ShortBuffer sb =

((ByteBuffer)bb.rewind()).asShortBuffer();

System.out.println("Short Buffer");

while(sb.hasRemaining())

System.out.println(sb.position()+ " -> " + sb.get());

DoubleBuffer db =

((ByteBuffer)bb.rewind()).asDoubleBuffer();

System.out.println("Double Buffer");

while(db.hasRemaining())

System.out.println(db.position()+ " -> " + db.get());

monitor.expect(new String[] {

"Byte Buffer",

"0 -> 0",

"1 -> 0",

"2 -> 0",

"3 -> 0",

"4 -> 0",

"5 -> 0",

"6 -> 0",

"7 -> 97",

"Char Buffer",

"0 -> \0",

"1 -> \0",

"2 -> \0",

"3 -> a",

"Float Buffer",

"0 -> 0.0",

"1 -> 1.36E-43",

"Int Buffer",

"0 -> 0",

"1 -> 97",

"Long Buffer",

"0 -> 97",

"Short Buffer",

"0 -> 0",

"1 -> 0",

"2 -> 0",

"3 -> 97",

"Double Buffer",

"0 -> 4.8E-322"

});

}

} ///:~

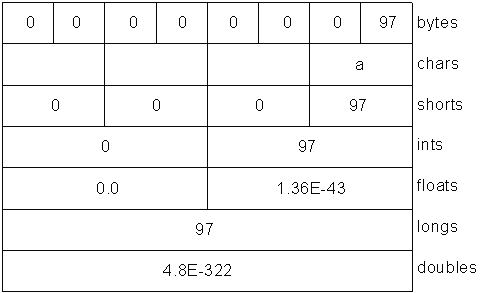

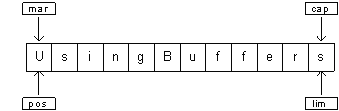

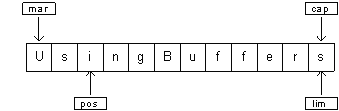

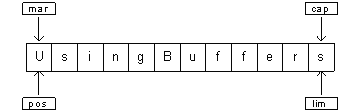

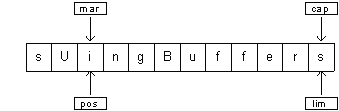

The ByteBuffer is produced by "wrapping" an eight-byte array, which is then displayed via view buffers of all the different primitive types. You can see in the following diagram the way the data appears differently when read from the different types of buffers:

This corresponds to the output from the program.
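
Earlier it was mentioned that a ByteBuffer filled through a view buffer can be written directly to a channel, and that data read from a channel can be converted with a view. The following is a rough sketch of that round trip, not a definitive implementation: the class IntsThroughChannel, its roundTrip( ) method, and the temporary file are all assumptions made for the illustration.

```java
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.util.Arrays;

public class IntsThroughChannel {
  // Hypothetical helper: write ints to a scratch file, then read them back.
  static int[] roundTrip(int[] values) {
    try {
      File f = File.createTempFile("ints", ".dat"); // Scratch file
      f.deleteOnExit();
      ByteBuffer out = ByteBuffer.allocate(values.length * 4);
      // Filling through the view leaves out's own position at zero,
      // so the buffer can be written without a flip()
      out.asIntBuffer().put(values);
      try (FileChannel wc = new FileOutputStream(f).getChannel()) {
        while (out.hasRemaining())
          wc.write(out);
      }
      ByteBuffer in = ByteBuffer.allocate(values.length * 4);
      try (FileChannel rc = new FileInputStream(f).getChannel()) {
        while (in.hasRemaining() && rc.read(in) != -1)
          ; // Keep reading until the buffer is full or the file ends
      }
      in.flip(); // read() moved the position, so prepare for reading
      int[] result = new int[in.remaining() / 4];
      in.asIntBuffer().get(result);
      return result;
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }

  public static void main(String[] args) {
    // Prints [11, 42, 47, 99]
    System.out.println(Arrays.toString(roundTrip(new int[] { 11, 42, 47, 99 })));
  }
}
```

Notice the asymmetry: no flip( ) is needed before writing because only the view's position moved, but one is needed after reading because the channel advances the position of the ByteBuffer itself.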

12-9-3-1. Endians▲

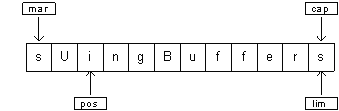

Different machines may use different byte-ordering approaches to store data. "Big endian" places the most significant byte in the lowest memory address, and "little endian" places the most significant byte in the highest memory address. When storing a quantity that is greater than one byte, like int, float, etc., you may need to consider the byte ordering. A ByteBuffer stores data in big endian form by default, and data sent over a network conventionally uses big endian order (often called "network byte order"). You can change the endian-ness of a ByteBuffer using order( ) with an argument of ByteOrder.BIG_ENDIAN or ByteOrder.LITTLE_ENDIAN.
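
As a quick sketch of order( ) (the class ByteOrderDemo and its layout( ) helper are illustrative names), the following stores the same int under each ordering and shows how the bytes land in the backing array. It also prints the ordering of the underlying hardware, which depends on the machine:

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.util.Arrays;

public class ByteOrderDemo {
  // Hypothetical helper: store one int using the given byte order
  // and return the backing array.
  static byte[] layout(int value, ByteOrder order) {
    ByteBuffer bb = ByteBuffer.allocate(4);
    bb.order(order);
    bb.putInt(value);
    return bb.array();
  }

  public static void main(String[] args) {
    // A new ByteBuffer starts out big endian; prints BIG_ENDIAN
    System.out.println(ByteBuffer.allocate(4).order());
    // The hardware's own ordering; the result depends on the machine
    System.out.println(ByteOrder.nativeOrder());
    // Prints [1, 2, 3, 4]
    System.out.println(Arrays.toString(
      layout(0x01020304, ByteOrder.BIG_ENDIAN)));
    // Prints [4, 3, 2, 1]
    System.out.println(Arrays.toString(
      layout(0x01020304, ByteOrder.LITTLE_ENDIAN)));
  }
}
```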

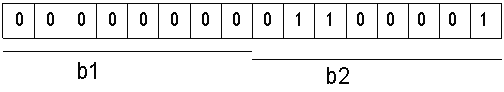

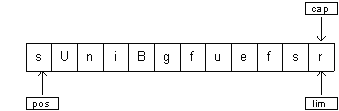

Consider a ByteBuffer containing the following two bytes:

If you read the data as a short (ByteBuffer.asShortBuffer( )), you will get the number 97 (00000000 01100001), but if you change to little endian, you will get the number 24832 (01100001 00000000).
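
You can check those two numbers with a few lines of code. This is only a sketch, and ShortEndianDemo and its read( ) helper are names invented for it:

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

public class ShortEndianDemo {
  // Hypothetical helper: read the first short using the given byte order.
  static short read(byte[] data, ByteOrder order) {
    ByteBuffer bb = ByteBuffer.wrap(data);
    bb.order(order);
    // A view takes on the byte order in effect when it is created
    return bb.asShortBuffer().get();
  }

  public static void main(String[] args) {
    byte[] two = { 0, 97 }; // 00000000 01100001
    System.out.println(read(two, ByteOrder.BIG_ENDIAN));    // 97
    System.out.println(read(two, ByteOrder.LITTLE_ENDIAN)); // 24832
  }
}
```

The second result is 97 * 256, because in little endian order the byte holding 97 becomes the most significant one.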

Here's an example that shows how byte ordering is changed in characters depending on the endian setting:

//: c12:Endians.java

// Endian differences and data storage.

import java.nio.*;

import com.bruceeckel.simpletest.*;

import com.bruceeckel.util.*;

public class Endians {

private static Test monitor = new Test();

public static void main(String[] args) {

ByteBuffer bb = ByteBuffer.wrap(new byte[12]);

bb.asCharBuffer().put("abcdef");

System.out.println(Arrays2.toString(bb.array()));

bb.rewind();

bb.order(ByteOrder.BIG_ENDIAN);

bb.asCharBuffer().put("abcdef");

System.out.println(Arrays2.toString(bb.array()));

bb.rewind();

bb.order(ByteOrder.LITTLE_ENDIAN);

bb.asCharBuffer().put("abcdef");

System.out.println(Arrays2.toString(bb.array()));

monitor.expect(new String[]{

"[0, 97, 0, 98, 0, 99, 0, 100, 0, 101, 0, 102]",

"[0, 97, 0, 98, 0, 99, 0, 100, 0, 101, 0, 102]",

"[97, 0, 98, 0, 99, 0, 100, 0, 101, 0, 102, 0]"

});

}

} ///:~

The ByteBuffer is given enough space to hold all the bytes of the string "abcdef" (six chars at two bytes each), and it wraps an external array so that the array( ) method can be called to display the underlying bytes. The array( ) method is "optional," and you can only call it on a buffer that is backed by an array; otherwise, you'll get an UnsupportedOperationException.
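
If you don't know where a buffer came from, you can ask hasArray( ) before calling array( ). The sketch below (ArrayBackedDemo and arrayCallFails( ) are illustrative names) compares a wrapped buffer with a direct one. It assumes that direct buffers are not backed by an accessible array, which is typically the case but is not something the code relies on:

```java
import java.nio.ByteBuffer;

public class ArrayBackedDemo {
  // Hypothetical helper: reports whether array() refuses to work.
  static boolean arrayCallFails(ByteBuffer bb) {
    try {
      bb.array();
      return false;
    } catch (UnsupportedOperationException e) {
      return true; // No accessible backing array
    }
  }

  public static void main(String[] args) {
    ByteBuffer wrapped = ByteBuffer.wrap(new byte[12]);
    ByteBuffer direct = ByteBuffer.allocateDirect(12);
    System.out.println(wrapped.hasArray());      // true
    System.out.println(arrayCallFails(wrapped)); // false
    // Assumption: typically false and true, respectively
    System.out.println(direct.hasArray());
    System.out.println(arrayCallFails(direct));
  }
}
```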

The string "abcdef" is inserted into the ByteBuffer via a CharBuffer view. When the underlying bytes are displayed, you can see that the default ordering is the same as the subsequent big endian order, whereas the little endian order swaps the bytes.